Data Recovery Expert

Viktor S., Ph.D. (Electrical/Computer Engineering), was hired by DataRecoup, the international data recovery corporation, in 2012. He was promoted to Engineering Senior Manager in 2010 and then to his current position, C.I.O. of DataRecoup, in 2014. He is responsible for managing critical, high-priority RAID data recovery cases and for applying his expert knowledge of database data retrieval. He is also responsible for planning and implementing SEO/SEM and other internet-based marketing strategies. Currently, Viktor S., Ph.D., is focusing on the further development and expansion of DataRecoup’s major internet marketing campaign for its already successful proprietary software application “Data Recovery for Windows” (an application which he developed).

SQL FAQ – How Does A Page Level Restore Improve SQL Server Recovery Provisions?

For very large Microsoft SQL Server databases, a complete restore operation can take many hours. During this time the database is kept unavailable, so that no new data is entered and then lost while information is copied back from tape or disk. In a high-availability environment any downtime is costly, so keeping it to a minimum is essential.

Fortunately, page level restore techniques can keep recovery times to a minimum by reducing the amount of data that needs to be copied back from the backup media. Since the release of Microsoft SQL Server 2005, DBAs have had the option of carrying out a ‘page level restore’, which allows them to recover a ‘handful’ of pages rather than having to restore entire datasets and copy information back into the original database.

The page level restore operation is well suited to situations where data becomes corrupted during writes because of a faulty disk controller, misconfigured antivirus software or a faulty I/O subsystem. Better still, page level restore operations can be performed online in Enterprise editions of Microsoft SQL Server.

Prerequisites

As with any database recovery operation, page level restores rely on having a complete backup from which to work. If such a backup is not available, you will need to investigate an alternative method of recovering data directly from the server disks.

Although you can carry out the page level restore with the database online, you may decide to play it safe by switching to single user mode while you transfer data, using:

ALTER DATABASE <DBName> SET SINGLE_USER

WITH ROLLBACK AFTER 10 SECONDS

GO

This command rolls back any open transactions after 10 seconds, disconnects other users, and restricts the database to a single connection until you change the mode back again. You will also want to back up the tail of the log, so that all transactions are fully accounted for and no further data is lost:

BACKUP LOG <DBName>

TO DISK = N'X:\SQLBackups\DBName_TailEnd.trn'

GO

Making Virtual Server Recovery-In-Place Viable

Backup technology for virtualized environments has become increasingly advanced. Many organizations have implemented backup applications that are specifically designed to back up data efficiently in a virtualized environment without disrupting application performance. In addition, some backup applications, like Veeam, now allow data residing on a disk-based backup target to be used as a boot device to support instant VM recoveries.

Boot From Backup

Generically referred to as “recovery-in-place”, this feature gives administrators the option to point a VM to the backup data residing on a disk partition (typically a backup appliance) so that a failed VM can be recovered more quickly. The idea is to use the backup data as a temporary boot area until a full data restore can be completed onto a primary storage resource.

3 Permanent Ways To Erase Your Data

In our last blog, we discussed the importance of being able to securely and permanently erase end-of-life data. Whether you’re working for a company that has legal obligations to destroy customers’ personal information after a certain timeframe, or you’re looking to sell an old smartphone on eBay and want to make sure nobody digs up your selfies, it pays to know how to do the job properly. And yet this is often a source of confusion – lots of consumers and businesses hold misconceptions about what constitutes secure data destruction and what doesn’t.

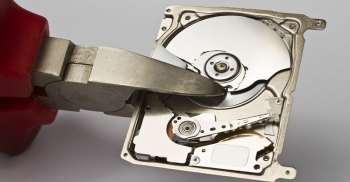

Formatting a disk, for example, won’t actually wipe it – it just removes the existing file system and generates a new one, which is analogous to throwing out a library catalogue when you really want to clear out the library itself. What’s more, taking a hammer to your hard drives does not guarantee that the data is gone: however unlikely, someone with enough time on their hands might still reassemble the platters and read the data back.

So, how can consumers and businesses achieve peace of mind that their sensitive information won’t be coming back to haunt them after it’s been deleted? There are actually a few different fail-safe data destruction methods that have the approval of international governments and standards agencies, which vary wildly in cost and come with their own particular advantages and disadvantages. Here are three of the most important.

Method 1: Data erasure software

One of the simplest ways to permanently erase data is to use software. Hard drives, flash storage devices and virtual environments can all be wiped without specialist hardware, and the software required ranges from free – such as the ‘shred’ command bundled with most Unix-like operating systems – to commercial products.

While different data destruction applications use different techniques, they all adhere to a single principle: overwrite the information stored on the medium with something else. So, a program might go over a hard drive sector by sector and replace every bit with a zero, or with randomly generated data. In order to ensure that no trace of the original magnetic pattern remains, this is typically done multiple times – common algorithms include the Schneier seven-pass method, as well as the even more rigorous 35-pass Gutmann method.
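The overwrite principle can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular erasure product works: the function name `overwrite_file`, its parameters and the default of three passes are hypothetical, and it operates on a single file rather than a whole drive. It writes random data on every pass except the last, which writes zeros.

```python
import os
import secrets


def overwrite_file(path, passes=3, chunk_size=1024 * 1024):
    """Overwrite a file in place (hypothetical helper, for illustration only).

    Every pass except the last writes random bytes; the last writes zeros.
    """
    if passes < 1:
        raise ValueError("passes must be at least 1")

    size = os.path.getsize(path)
    for pass_number in range(passes):
        final_pass = pass_number == passes - 1
        # "r+b" opens the existing file for writing without truncating it,
        # so each pass starts again at offset 0 and covers the same bytes.
        with open(path, "r+b") as handle:
            remaining = size
            while remaining > 0:
                length = min(chunk_size, remaining)
                if final_pass:
                    block = bytes(length)  # all zero bytes
                else:
                    block = secrets.token_bytes(length)  # random bytes
                handle.write(block)
                remaining -= length
            # Ask the operating system to push the data to the device
            # before the next pass begins.
            handle.flush()
            os.fsync(handle.fileno())
```

Calling `overwrite_file("old_report.doc", passes=7)` would run seven passes over that file. Note that on SSDs and on journaling or copy-on-write file systems, an in-place overwrite like this may not reach the physical blocks that held the original data, so the sketch should not be treated as a certified erasure method.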

Unfortunately, there are a few drawbacks to software-based data erasure. For one, it’s fairly time-consuming. Then, perhaps more significantly, there’s the fact that if certain sectors of the hard drive become inaccessible via normal means, the application won’t be able to write to them. Yet it may still be possible for someone with the right tools to recover data from those bad sectors.

Obviously, software-based data erasure also hits a snag when you want to destroy information stored on media that can only be written to once, such as most optical discs.

IBM Cloud Virtualized Server Recovery (VSR) – Service Introduction

With cloud services becoming ubiquitous for server operations, it’s a natural progression for servers themselves to be backed up in the cloud.

It’s been estimated that server downtime can cost a company upwards of $50,000 per minute, so a server backup solution you can trust is imperative.

IBM has a very robust, efficient, and reliable solution to ensure that server downtime is kept to a minimum. Why IBM? They are an innovator in the field of disaster recovery, with more than 50 years of experience in business continuity and resiliency.

At the heart of the IBM Cloud Virtualized Server Recovery (VSR) system, which keeps up-to-the-second backup copies of your protected servers in the IBM Resiliency Cloud, are bootable disk images of your servers. If an outage occurs, your servers can be brought back up from these images via a web-based management portal, and the process can be fully automated.