Myth No 1: there are no bad blocks on modern hard drives. Not true: they can be there, and they are there. Essentially, the technology is the same as it was years ago, only improved and refined, and it is still not perfect (it is unlikely that a perfect technology will ever be built on magnetic recording).

Myth No 2: bad blocks are not relevant for hard drives equipped with SMART (read: there cannot be any bad blocks). Not true: they are just as relevant as for drives without S.M.A.R.T. (if such drives are still available). The notion of a bad sector is native to this technology, as should be clear from the articles devoted to it. The point is that most of the bad-sector chores that used to fall on the user have been taken over by SMART. A user may often be entirely unaware of bad blocks on the drive unless the situation is grave. I have heard from some users that sellers sometimes use this to justify refusing to exchange a hard drive under warranty. The seller is wrong in this case. SMART is not almighty, and nobody has abolished bad blocks. To make sense of bad blocks and their types, let's dig a little into how information is stored on a hard drive. Let's make several points clear.

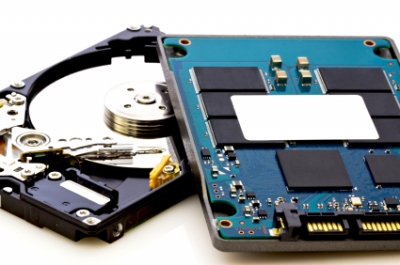

1. The unit a hard drive operates with at the low level is the sector. The physical disk space corresponding to a sector holds not only data but also service information: identification fields, checksum, data and control codes, error recovery code and so on (this is not standardized and depends on the manufacturer and model). With respect to identification fields, there are two recording types: with identifier fields and without them. The first is old and has given way to the second. It will become clear later why I note this. It is also important that error control mechanisms exist (which, as we will see, can themselves become a source of bad blocks).

2. With old hard drives you had to enter the physical parameters printed on the label into the BIOS, and to address a data block unambiguously you had to specify the cylinder number, the sector number on the track, the head number, and so on. Work with the disk depended entirely on its physical parameters. This was inconvenient and tied developers' hands in many respects. A way out was badly needed, and it was found in address translation. The variant that interests us is this: data on the drive is addressed with a single parameter, and the job of determining the real physical address corresponding to that parameter is given to the hard drive controller. This gave much-needed freedom and compatibility. The real physical parameters of the drive no longer mattered; what matters is that the number of logical blocks specified in the BIOS does not exceed the real number. The translator is also crucial for the bad sector problem, and here is why. Bad sector handling on old drives was imperfect and was done with file system tools. The drive came with a label listing the addresses of bad blocks detected by the manufacturer. The user had to enter these manually in the FAT, which kept the operating system from accessing them. Platter manufacturing technology was not perfect then and is not now. Contrary to the popular opinion that drives leave the factory without bad sectors, there is no technique for producing a perfect, defect-free surface. As drive capacity grew, so did the number of factory bad sectors, and it was clear that manual registration in the FAT could only work up to a point; manufacturers had to find a way to mark bad sectors regardless of the file system that would later be used. The translator allowed them to solve these problems.
A special protected area was set aside for the translator, which establishes the correspondence between every logical block of a continuous chain and a real physical address. If a bad block was detected on the surface, it was simply skipped, and the logical number went to the next available physical block in the chain. The translator is read from the drive at power-on. It is created at the factory, and that is why new disks appear to contain no bad blocks, not because the manufacturer applies some super technology. Physical parameters became hidden (and they varied widely, because companies were free to design their own low-level formats without bothering users), defects were marked at the factory, and versatility increased. Just like in a fairy tale.
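
The idea of a translator that skips factory defects can be illustrated with a minimal sketch. It assumes a drive modelled as a flat run of physical sector numbers and a known list of defective ones; the function name `build_translator` and the parameter `factory_defects` are hypothetical, and real drives keep this table in a vendor-specific format.

```python
def build_translator(physical_sector_count, factory_defects):
    """Return a list where index = logical block, value = physical sector.

    Defective physical sectors are skipped, so logical numbers stay
    continuous and the host never sees the gaps.
    """
    defects = set(factory_defects)
    return [phys for phys in range(physical_sector_count) if phys not in defects]


# Toy drive: 10 physical sectors, two of them found bad at the factory.
translator = build_translator(10, factory_defects=[2, 5])
print(translator)       # [0, 1, 3, 4, 6, 7, 8, 9]
print(len(translator))  # 8 logical blocks are visible to the host
```

Logical block 2 lands on physical sector 3, and everything after the second defect is shifted by two, which is why the host sees a clean, continuous address space.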

Now let's get back to bad blocks and their types. Depending on their origin, all bad blocks fall into two large groups: logical and physical.

Physical and logical defects.

Surface defects can have several causes. One is gradual wear of the magnetic coating. Another is tiny dust particles that leak through the filter: accelerated inside the drive to tremendous speed, they have enough kinetic energy to damage the disk surface (most probably centrifugal force will throw them off the disk and the internal filter will catch them, but they can still damage something on the way :). A third is mechanical damage from impact: small particles can be knocked out and in turn knock out others, so the process becomes an avalanche (such particles are also thrown off the platters by centrifugal force, but this takes much longer because magnetic forces hold them back). This also involves collisions with the heads, which float at a very small height; the result is overheating and degraded performance: the signal is distorted, and a read error follows. I have also heard (though I have no statistics) that smoking near the computer can have the same effect, because tobacco tar can get through the air filter (if there is one) and either make the heads stick to the platters (damaging both surface and heads) or simply accumulate on the surface and change its characteristics. Such sectors become inaccessible and must be excluded from addressing. They cannot be recovered either at home or in service centres; it is good luck if at least the information can be recovered from them. The speed of surface destruction varies from case to case. If the number of bad sectors is not growing, or is growing slowly, there is no need to worry (although it is still better to back up your data); if it grows fast, you will have to replace the drive, and quickly. Bad blocks of this kind can be remapped to the spare surface, which makes sense as long as the damage is not progressing. But that is not our subject for now.
All of this concerns the data area. As already noted, platters also store service information. It too can be destroyed in use, and this can be far more painful than the destruction of ordinary user surface. The point is that servo information is used actively during operation: servo marks are used to stabilize the rotation speed and to keep the head over the required cylinder regardless of external influences. Minor destruction of servo information can pass unnoticed. Serious damage to the servo format can make part of the disk, or the entire disk, inaccessible. Because servo information is used by the drive's own program, is crucial for normal operation, and is highly specific, dealing with it is rather complicated. Some hard drives allow bad servo tracks to be turned off. Recovery is possible only at the factory, on special expensive and sophisticated equipment (estimate the approximate cost of such a repair for an out-of-warranty drive and you will quickly see why this type of bad sector should be called irrecoverable). Physical bad sectors also include those caused by a malfunction of the drive's electronics or mechanics: head failure, or serious mechanical damage from impact, such as a jammed actuator coil, jammed disks, or disk displacement. What to do depends on the situation. With head failure, for example, bad sectors appear because the system tries to access a surface that cannot be reached (which does not mean anything is wrong with the surface). The head can be turned off, or replaced in a specialized service centre; the only thing that may make you doubt such an operation is its cost (in most cases it is not worth it), unless, of course, critical information is at stake (but that is another story :).
In general, this kind of damage is catastrophic. As we can see, physical bad blocks are not recoverable; only some 'relief' from their presence is possible. The situation with logical bad blocks is much simpler. Some of them are recoverable. In most cases they are caused by read errors. We can outline the following categories of logical bad blocks:

1. The simplest case: file system errors. The sector is marked as bad in the FAT but in reality is not. Some viruses used to use this trick when they needed a hiding place on the drive that standard tools could not reach. The trick is no longer relevant, because it is not difficult to hide several megabytes (even several dozen) inside Windows. Besides, somebody might simply have played a prank on a naive user (I have seen such programs :). In general, the file system is a vulnerable thing; this case is cured easily and with no consequences at all.

2. Irrecoverable logical bad blocks are typical of old hard drives that record with identifier fields. If you have such a drive, you will probably meet them. They are caused by an incorrect physical address format recorded for the sector, a checksum error, and so on. The sector is accordingly inaccessible. In reality such blocks are recoverable, but only at the factory. Since, as I have mentioned, current recording technology does not use identifier fields, this type of bad sector can be considered irrelevant.

3. Recoverable logical bad blocks. This type is not rare, especially on some kinds of drives. Such blocks mostly owe their origin to write errors. The sector cannot be read, because the ECC code in it usually does not match the data, and it usually cannot be written either, because the target area is checked before writing, and since it already has a problem, the write is rejected. In other words, the block cannot be used even though the physical area it occupies is in perfect order. Defects of this kind are sometimes caused by errors in the drive's microprocessor, and can be triggered by software or hardware factors (for example, a power interruption or fluctuation, or the head moving to an unacceptable height during a write). But if you manage to bring the sector contents and its ECC code back into agreement, such bad blocks disappear without a trace. The procedure is not difficult, and the tools for it are widely available and generally harmless.

4. Bad blocks of this type appear because of the peculiarities of manufacturing: no two devices are absolutely identical, and some of their parameters always differ. When drives are prepared at the factory, each gets a set of parameters that ensures the best functioning of that particular specimen, the so-called adaptives. These parameters are saved on the drive, and if for some mysterious reason they are damaged, the result can be drive failure, unstable operation, or a large number of bad sectors appearing and disappearing in one part of the drive or another. You cannot do anything about this at home, but it can be set up and adjusted at the factory or a service centre.

So, we have clarified the causes of bad blocks. We understand that the phenomenon is not the most pleasant one. And traditionally, one fights the troubles one faces. That is why we are now going to talk about which tools to use, how, and why.

File system errors.

The first and simplest type of error we are going to cure is the file system error. As already mentioned, it is just a sector mistakenly marked in the file system. The conclusion suggests itself: it has to be marked correctly.

Method No 1: logic suggests creating a normal file system on the disk. A tool for this comes with the operating system and is called format. Boot into MS-DOS and perform a full (and only full) format of the disk (command format x: /c, where x is the disk with the incorrect FAT and /c is the switch that makes format test clusters marked as damaged). Quick formatting will not work here, because it only clears the header and keeps the information about bad blocks. Formatting can be done from Windows as well, but how it works remains a mystery to me, and the result is sometimes unpredictable (I have seen cases where the bad status was wiped from physically damaged sectors, which leads to even more serious problems; it seems that Windows sometimes, though not always, simply resets the defect status in the FAT without going into details). I have not observed this with the ordinary format. This method is simple and available to everyone, but its disadvantage is the complete destruction of the information on the disk. And if the disk contains only a small number of such sectors, it is like shooting sparrows with a cannon.

Method No 2 consists in purchasing Power Quest Partition Magic, which has a Bad Sector Retest function. It checks only the sectors marked as bad and leaves the information on the disk untouched. More sophisticated users can use Norton Disk Editor or any other disk editor and mark or unmark the necessary sectors manually. You can also write a program for the purpose yourself, but there is no need. How do you find out whether your bad block is of this type? You cannot; you can only try (if you are not confident, I recommend the first two methods, because the last two can put a bad or unstable block back into operation and make the problem worse). If the block is of this type, it will disappear and never reappear. If not, try other methods.

The second type of curable bad block is the logical block whose data does not match its ECC. Fighting this type is a bit harder. Such defects cannot be corrected by software that uses standard BIOS commands and tools. The point is that with such tools the target area is checked before writing to make sure it is all right, and since there is an error, the write is rejected. (Such a check is wasteful, too: the data is not recorded immediately but only on the second pass, which is apparently one reason why write speed is usually slightly lower than read speed. Writing with verification would seem more logical. The write and the verifying read could be done together in the head stack assembly, which would guarantee that the data was recorded correctly. Even if this did not rule out the bad sectors under discussion, it would considerably reduce their number, because the write could be repeated when an error was detected.) We have gone a little deeper into the subject, but things have not become easier: we have established that the defect cannot be corrected with standard tools. Non-standard tools are programs that access the drive not through OS and BIOS functions but through input/output ports. There are in fact many such programs, and in most cases they force-write some content (usually zeroes) to the sector, and the drive calculates and writes the ECC. After that, check the sector by reading it. If there is no error, all is well: the sector is in the expected condition and has been cured. If the error remains, things are worse: a bad block that is neither a FAT error nor cured this way is probably physical. Tools that perform this function include wdclear, fjerase, zerofill, and DFT.
In most cases such tools are not tied to a particular drive, because they do not use any drive-specific function. Working with them does not require special skills either. Such zeroers are usually distributed on manufacturers' websites as low-level format programs, although they have nothing to do with low-level formatting. Manufacturers recommend using them when problems arise, before contacting a service centre. Apart from destroying the information, they are harmless.
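
Why a forced zero-fill cures this kind of bad block can be shown with a toy model. This is only a sketch under loud assumptions: the `Sector` class is hypothetical, CRC32 stands in for the drive's real (vendor-specific) ECC, and the pre-write check is modelled exactly as the text describes it, not as any particular firmware implements it.

```python
import zlib

SECTOR_SIZE = 512


class Sector:
    """Toy sector: user data plus a check code (CRC32 stands in for real ECC)."""

    def __init__(self):
        self.data = bytes(SECTOR_SIZE)
        self.ecc = zlib.crc32(self.data)

    def is_readable(self):
        # A read succeeds only if the stored code matches the stored data.
        return zlib.crc32(self.data) == self.ecc

    def checked_write(self, data):
        # Models the standard path from the text: the area is checked
        # first, and an inconsistent sector rejects the write.
        if not self.is_readable():
            return False
        self.forced_write(data)
        return True

    def forced_write(self, data):
        # Models a zero-fill utility: data is written unconditionally
        # and the check code is recomputed to match it.
        self.data = data
        self.ecc = zlib.crc32(data)


sector = Sector()
sector.data = b"\xff" * SECTOR_SIZE   # simulate an interrupted write: data changed, code stale
stuck = not sector.is_readable() and not sector.checked_write(bytes(SECTOR_SIZE))
sector.forced_write(bytes(SECTOR_SIZE))
cured = sector.is_readable()
print(stuck, cured)
```

In this model the sector is unusable through the checked path even though nothing is physically wrong with it, and a single unconditional write brings data and check code back into agreement.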

Besides the drive manufacturers, service programs are also developed by independent companies and enthusiasts. One example is MHDD, a free and very useful program (type the name into a search engine and download it) that can help in this situation. The procedure is as follows. Before you start, save all the information from the drive somewhere else, because it will be destroyed (with experience you can zero only the necessary part without destroying the rest of the data, but it is assumed that you do not have it). Write the program to a system diskette and boot from it. Examine the SMART state with an external SMART monitor (for example, the free SMARTUDM) and, rather than relying on your memory, save the result to a file. Start MHDD and initialize the required disk by pressing F2. Run erase or aerase in the console (they use different algorithms; aerase is slower but sometimes handles what erase could not, so I recommend trying erase first and using aerase if it fails). When it finishes, check the disk surface: press F4, select the required addressing mode in the top line (most probably LBA), and press F4 again (or run SCAN in the console). Check whether bad sectors are present. Then examine the SMART readings. If the number of remapped sectors has stayed the same and the bad sectors have disappeared, they were logical and have been cured. If not, their origin is not logical. You may well ask why you cannot use, as in the previous case, the format command with the /c switch, which checks bad sectors. The answer has already been given in this article: that program uses standard BIOS tools and cannot write to bad sectors. Apparently the programmers at Microsoft did not want to bother with it.
The sector recovery attempt that format reports is just a repeated attempt to read the sector (no matter how many times it is read, it will not read, and the controller has already established this!). Format cannot fully check such a bad sector because it cannot write to it. The only thing it is good for is recovering bad sectors that are file system errors. I suppose this is the end of the part of the article that anyone can read and use. Everything described so far was simple and harmless. What follows is not like that at all. Be careful.
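
The decision rule used after the erase-and-scan procedure above can be written down in a few lines. This is a sketch of the rule as stated in the text, not of any tool's logic; the function name and its parameters are hypothetical, and the numbers are whatever you noted from your SMART viewer and surface scan.

```python
def diagnose_after_erase(remapped_before, remapped_after, bad_blocks_in_scan):
    """Apply the article's rule to readings taken before and after zeroing.

    remapped_before / remapped_after: remapped-sector count from SMART.
    bad_blocks_in_scan: bad blocks reported by the surface scan after erase.
    """
    if bad_blocks_in_scan == 0 and remapped_after == remapped_before:
        return "logical, cured"
    return "not logical"


print(diagnose_after_erase(3, 3, 0))  # logical, cured
print(diagnose_after_erase(3, 3, 7))  # not logical
print(diagnose_after_erase(3, 9, 0))  # not logical
```

Saving the SMART report to a file before the procedure is what makes the comparison possible at all.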

Physical damages of HDD

If none of the above methods helped, we most probably have the most difficult case: physical damage. Such sectors can be hidden or remapped. It is well known that hard drives have a spare surface. It is used when a bad sector is accessed: when an access to a bad sector is requested, in reality the sector from the spare surface assigned to replace it is accessed. There are different methods.

The spare sector method places on each track of the drive one sector that is inaccessible in normal mode. If a bad sector is detected, the track has another sector to replace it. The advantage of this method is that it has virtually no effect on performance. Its disadvantages are, first, that disk capacity is wasted, because the spare sector is there whether or not the track has a bad sector, and second, that it does not help when a track has more than one bad sector (there are variants of this method in which the reserve sector is allocated per cylinder, although that does not make them more effective).

The spare track method keeps a certain number of spare tracks beyond the working area. A track with defects is excluded from operation and replaced by a spare one. The limitation of the method is that space is wasted, because even a single bad sector takes an entire track out of operation. Also, to reach the spare area the head has to make a long move, which hurts overall performance.

The defective track skipping method also keeps a certain number of tracks beyond the working area, but uses them differently. Here the working area shifts towards the centre, because the effective track number is found by adding to the calculated track number the number of defects that precede it in the defect list. The advantage over the previous method is that there is no need to move to the spare area, and hence a gain in performance.

The bad sector skipping method is similar to bad track skipping, with the only difference that it operates on sectors instead of tracks and can be applied only to hard drives that use a translator. The sector's physical address is calculated from the translator table.
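
The difference between the spare track and track skipping methods is easy to see in a small calculation. The sketch below assumes tracks are numbered from 0 and that spare tracks sit at a known track number far from the data; both function names and the parameter `first_spare_track` are hypothetical.

```python
def spare_track_physical(logical_track, defect_tracks, first_spare_track):
    """Spare track method: a defective track is swapped for a spare one far away."""
    ordered = sorted(defect_tracks)
    if logical_track in ordered:
        return first_spare_track + ordered.index(logical_track)
    return logical_track


def skip_track_physical(logical_track, defect_tracks):
    """Track skipping: shift by the number of defective tracks passed so far."""
    physical = logical_track
    for bad in sorted(defect_tracks):
        if bad <= physical:
            physical += 1   # step over the defective track
        else:
            break
    return physical


defects = [3, 4]
# Head is on track 2 and the next request is for logical track 3.
seek_spare = abs(spare_track_physical(3, defects, first_spare_track=100) - 2)
seek_skip = abs(skip_track_physical(3, defects) - 2)
print(seek_spare, seek_skip)  # 98 3
```

With spare tracks the head jumps to the reserve area and back; with skipping it moves only past the defective tracks, which is the performance gain the text refers to.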

The first three methods have a number of disadvantages and are practically never used for factory bad sector hiding (and given the peculiarities of new hard drives, some of them cannot be applied at all). As a rule, the fourth and last method is used. It allows virtually any number of bad sectors to be hidden and uses the space efficiently.

Factory testing of hard drives for bad blocks is carried out on special equipment in a special processing mode. At the end of the process a list of all unusable sectors is compiled. The list is written to the service area, where it is kept for as long as the drive is in use. The factory defect list is called the P-list (Primary list). Once the P-list is obtained, the translator is built. The translator maps logical sector numbers, which run continuously and in order, to physical addresses, skipping every detected bad sector and using the next one after it. This process is called internal formatting and is run by the drive's own program without outside participation. Besides the factory P-list, the drive also has a G-list (Grown list), which holds information about bad sectors found during operation. The only thing you can do at home is remap a detected defect to the spare area, with all that this implies (loss of performance).
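
How a grown defect list differs from the factory one can be sketched as a small lookup table with limited room. This is an illustration only: the class `GrownDefectList`, its capacity, and the spare sector numbers are hypothetical, and real drives store and limit the G-list in vendor-specific ways.

```python
class GrownDefectList:
    """Toy G-list: redirects bad physical sectors to spare ones while room lasts."""

    def __init__(self, capacity, spare_sectors):
        self.capacity = capacity              # how many entries the list can hold
        self.free_spares = list(spare_sectors)
        self.remap = {}                       # bad physical sector -> spare sector

    def remap_sector(self, bad_physical):
        if bad_physical in self.remap:
            return True                       # already remapped
        if len(self.remap) >= self.capacity or not self.free_spares:
            return False                      # list full or spare area exhausted
        self.remap[bad_physical] = self.free_spares.pop(0)
        return True

    def resolve(self, physical):
        # Unlike the P-list, nothing is shifted: only listed sectors are redirected.
        return self.remap.get(physical, physical)


g_list = GrownDefectList(capacity=2, spare_sectors=[1000, 1001, 1002])
results = [g_list.remap_sector(s) for s in (5, 9, 12)]
print(results)             # [True, True, False]
print(g_list.resolve(5))   # 1000
print(g_list.resolve(12))  # 12
```

The redirect to a distant spare sector is the source of the performance loss mentioned above, and the `False` result corresponds to a sector that can no longer be remapped.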

Several reservations about this method are worth mentioning. The G-list is not large, and remaps cannot be added to it endlessly: only while the G-list has room and the spare surface is not exhausted. Bear in mind, too, that the more sectors are remapped, the more often the heads must position to the spare area, and the slower the drive becomes. Think seriously about whether you should do it: are avoiding a small loss of space and getting a pretty picture in Scandisk without any Bs worth a tangible loss of performance (which depends on the number of remaps)? Maybe it is better to leave things as they are and enjoy life. Remapping is irreversible. If something goes wrong or you are dissatisfied with the result, you will not be able to undo the changes. If you decide to go ahead anyway, you need one of the following programs: HDD Speed, HDD Utility, or MHDD again. You will also need a SMART attribute viewer: HDD Speed has one, but you can use a separate program (SMARTUDM). It is assumed that you have already tried to cure logical bad blocks and failed, and now we are trying to hide physical ones.

Let's again take MHDD as the example. The procedure is almost the same as before. Having booted from the diskette, examine the S.M.A.R.T. state. Then start MHDD and select the required drive. It is not necessary to save the drive's information (though you can), because it will not be destroyed. Initialize the drive by pressing F2. Then press F4, select LBA or CHS in the top line, turn on the remap function, and start the surface scan by pressing F4 again (or by running SCAN in the console). Then check for bad blocks. Remapped bad sectors are shown as [ok]. After the first scan in which remapping took place, run another scan; if that scan reports no remapping, there is no need to run it again. Having done that, examine the SMART readings. The following outcomes are possible. The remapped sectors counter has increased and the bad sectors have disappeared: we have achieved what we wanted, and the bad sectors have been replaced with spare ones. The number of remapped sectors is unchanged and the bad sectors have not disappeared: this can happen for several reasons. Either the bad sectors are not of the nature we assumed, or the sector cannot be replaced: the controller did not see that it was really a bad sector (there is no way to point this out to the controller in user mode; you can only hint at it by trying to read and write the sector), the G-list is full (this should be visible in the SMART readings), or the drive does not support remapping. In the first case all you can do is dig further. If the G-list is full, either put up with the sectors that cannot be remapped or contact specialists who can start formatting from inside the drive: the existing bad sectors will then be added to the P-list, and the G-list will be cleared. This is the best option, because it has none of the side effects of remapping.
You will not be able to start it at home, and the probability of ruining the hard drive is too high if formatting is interrupted by, say, a power failure or fluctuation (by Murphy's Law, it always happens at the worst possible moment): the drive is left without a translator, which can be fixed, but still. That is why drive manufacturers try not to give such functions to ordinary users. If the drive does not support remapping at all, nothing can be done; but if the remap function is merely turned off, simply turn it on with the vendor's tool (you can find it on the manufacturer's website). Most users who have heard or read something somewhere think of low-level formatting as the way to fight bad sectors.

There is even a legend that this special kind of formatting cures bad sectors, and dedicated forums regularly see questions like 'please tell me where I can get a tool for low-level formatting, I have bad sectors'. Let's see what it is and whether it is really so useful.

Low-level formatting is associated with the 50h command of the ATA standard, which was inherited from the ST506/412 interface. It is supposed to format a track with given physical parameters. However, all modern hard drives differ at the low level, because this level is designed entirely by the manufacturer. The translator hides the internal structure, so the command makes no sense. Most modern hard drives support it for compatibility, but since its original function is no longer relevant, they react to it in different ways.

Firstly, the command can be completely ignored.

Secondly, on some old drives the command can wipe the service data areas (apparently this is where the rumours about the damaging effect of Low Level Format come from).

Thirdly, it can zero all user data, and fourthly, it can remap sectors, which is what matters to us in the context of this article.

Talk of magic formatting apparently stems from the fact that it sometimes cures logical bad sectors or remaps physical ones. That is the whole essence of such formatting as treatment, no more and no less. But we already have the necessary tools, so why look for adventures? I think these are all the operations an ordinary user can perform. For other defects one can think of other ways to treat or eliminate them. For example, if bad sectors form one compact block in the middle of the disk or at its beginning, you can partition the disk so that they fall into an inaccessible partition; if they are at the end, you can use a special program (MHDD again, for example) to cut off the drive's tail: capacity shrinks, but the bad sectors are taken out of operation. If bad sectors are caused by a failed head, the head can simply be disconnected (although this is not a user operation). Generally speaking, there is plenty of room for creativity. But try not to get carried away, and do not forget that sometimes it is better to contact specialists.

A bad block is a specific area on the disk that cannot reliably hold data. Such an area can contain different kinds of information. It could be user data or service information (also known as servo, most probably from Latin servire and English serve), in which case it is fraught with consequences whose severity varies within very wide limits. The best case would be an area holding no information at all (though in all probability you will not find bad blocks in such areas). Such sectors can appear for many reasons, and the treatment depends on each specific case. But first things first. Now we will bust some very common myths.
