The first to tackle this problem were the engineers of "Big Blue" (IBM). Back in 1995 they proposed Predictive Failure Analysis (PFA), a technology that monitored several critical hard drive parameters and used the collected data to predict a drive's failure. The idea was picked up by Compaq, which later created its own technology called IntelliSafe. Seagate, Quantum and Conner also took part in developing Compaq's technology. It monitored a number of drive performance parameters, compared them with allowable values and reported to the host system if any danger was detected. This was a huge step forward, if not in increasing drive reliability, then at least in reducing the risk of data loss. The first attempts were successful and showed that the technology was worth developing further. Later, through the joint efforts of all major manufacturers, S.M.A.R.T. (Self-Monitoring, Analysis and Reporting Technology) appeared, based on IntelliSafe and PFA. (PFA, incidentally, still exists as a set of technologies for monitoring and analysing various subsystems of IBM servers, including the disk subsystem, whose monitoring is itself based on SMART.)

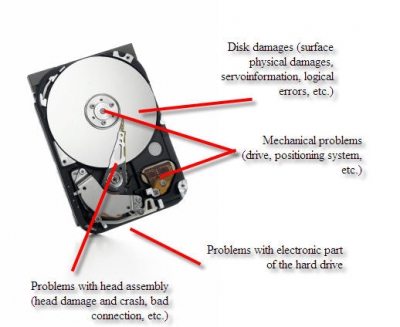

So, SMART. It is a technology for assessing the internal state of a hard disk drive and a mechanism for predicting its possible crash or failure. It is important to note that the technology does not, in principle, solve the problems that arise (the main ones are shown in the picture); it can only warn about a problem that already exists or one that is expected in the near future.

It should be noted that the technology cannot predict absolutely every possible problem, and this is reasonable: no technology can foresee an electronics failure caused by a power surge, head or surface damage caused by a shock, and the like. Only problems associated with the gradual degradation of some characteristic or component can be predicted.

Technology development stages

SMART has passed through three stages of development. The first generation monitored only a few parameters. The drive performed no activity on its own initiative: monitoring was triggered only by interface commands. There is no specification fully describing the standard and, accordingly, no precise list of parameters that must be monitored. Moreover, the choice of parameters and their threshold levels is left to the manufacturers (because each manufacturer knows best what should be monitored in its own drives, and drives differ too much from one another). Monitoring software, as a rule written by third-party companies, was not robust and could mistakenly report an impending crash (the confusion arose because different manufacturers recorded different values for the same parameters). There were many complaints that too few pre-failure states were detected (a peculiarity of human nature: you want everything at once; nobody even thought of complaining about sudden drive failures before SMART was introduced). The situation was made worse by the fact that in most cases the minimum requirements for normal SMART operation were not met. Statistics show that fewer than 20% of crashes were predicted. The technology at this stage was far from perfect, but it was still a breakthrough in the field.

Not much is known about the second stage of SMART, SMART II. Essentially the same problems were observed as in the first stage. The innovations were the following: background surface scanning performed by the drive automatically, error logging, and an enlarged list of monitored parameters (but again depending on the model and manufacturer). Statistics show that the share of predicted crashes reached 50%.

The current stage is represented by SMART III. Here we will dig deeper and try to understand how it works, what it is for and what it contains.

We already know that SMART monitors the key characteristics of the drive. These characteristics are called attributes, and the manufacturer decides which parameters to monitor. Each attribute has a value, usually in the range from 0 to 100 (though on some drives it can go up to 200 or 255), which expresses the reliability of that attribute relative to a reference value set by the manufacturer. A high value indicates that the parameter has not changed or, depending on the value, is deteriorating only slowly. A low value indicates rapid degradation or a possible imminent failure; in other words, the higher the value, the better. Some monitoring programs also display Raw or Raw Value: the attribute's value in the internal format in which it is stored on the drive (this format also varies from model to model). The raw value is less informative for an ordinary user; the value calculated from it is of more interest. Each attribute also has a lowest permissible value above which the manufacturer guarantees trouble-free operation of the device, the so-called Threshold. If an attribute's value drops below its Threshold, there is a high chance of an upcoming failure or crash. Attributes can also be critical or non-critical. If a critical attribute drops below its threshold, a crash is imminent; if a non-critical attribute drops below its threshold, this indicates a problem, but the drive can still remain operational (although some performance characteristics may worsen).
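The comparison of an attribute's value with its Threshold, and the different meaning of crossing it for critical and non-critical attributes, can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's or tool's actual logic: the `Attribute` type, the `assess` function and the status labels are hypothetical, and treating a value equal to the threshold as already crossed is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass
class Attribute:
    name: str
    value: int      # normalized value: the higher, the better
    threshold: int  # manufacturer-defined lower limit
    critical: bool  # critical vs. non-critical attribute


def assess(attr: Attribute) -> str:
    """Classify one attribute by comparing its value with its threshold."""
    # Assumption: reaching the threshold counts as crossing it.
    if attr.value > attr.threshold:
        return "OK"
    # Crossed: a critical attribute signals an imminent failure,
    # a non-critical one a problem the drive can usually live with.
    return "FAILING" if attr.critical else "DEGRADED"


if __name__ == "__main__":
    # Hypothetical readings, for illustration only.
    for a in (
        Attribute("Reallocated Sector Count", value=100, threshold=36, critical=True),
        Attribute("Spin Up Time", value=20, threshold=21, critical=True),
        Attribute("Start/Stop Count", value=15, threshold=20, critical=False),
    ):
        print(f"{a.name}: {assess(a)}")
```

Keeping the critical/non-critical flag on the attribute itself mirrors the article's point that the same event (crossing the threshold) means different things for different attributes.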

Most frequently observed critical parameters are the following:

Raw Read Error Rate – the rate of errors during data reads that are caused by the drive's hardware.

Spin Up Time – the time it takes the platter stack to spin up from rest to operating speed. To judge whether the value is normal, the actual time is compared with a reference value set at the factory. A Value that is below the maximum but not deteriorating, while Spin Up Retry Count stays at its maximum Value (Raw = 0), does not indicate anything bad. A deviation from the reference time can have a number of causes, for example a failing power supply unit.

Spin Up Retry Count – the number of retries needed to bring the disks up to operating speed after the first attempt failed. A non-zero Raw value (and, accordingly, a Value below the maximum) indicates problems in the mechanical part of the drive.

Seek Error Rate – the rate of head stack positioning errors. A high Raw value indicates a problem, such as damaged servo marks, excessive thermal expansion of the platters or mechanical problems in the positioning unit. A consistently high Value, on the other hand, indicates good condition.

Reallocated Sector Count – the number of sector reallocation operations. In modern drives SMART can check sectors for stable operation "on the fly" and, if it recognizes a sector as bad, reallocate it. We will discuss this in detail below.

The following are non-critical, informative attributes that are monitored:

• Start/Stop Count – the total number of spindle starts/stops. The spindle motor is guaranteed to withstand only a certain number of starts/stops, and this number is used as the threshold. The first drive models with a rotation speed of 7200 RPM had unreliable motors that could handle only a small number of starts/stops and failed very quickly.

• Power On Hours – the number of hours the drive has been powered on. Its threshold is the Mean Time Between Failures (MTBF). Since the quoted MTBF values are usually practically unreachable, the attribute is unlikely ever to reach its critical threshold, and even if it did, failure would not be inevitable.

• Drive Power Cycle Count – the number of complete power on/off cycles of the drive. From this and the previous attribute one can estimate, for example, how much the drive was used before it was purchased.

• Temperature – clear and simple: the readings of the built-in temperature sensor. Temperature has a great effect on the drive's service life (even when it stays within permissible limits).

• Current Pending Sector Count – the number of sectors waiting to be remapped. They have not yet been recognized as bad, but reading them behaves differently from reading a stable sector; these are the so-called suspicious or unstable sectors.

• Uncorrectable Sector Count – the number of sector access errors that could not be corrected. Possible causes include failures of mechanical parts or surface damage.

• UDMA CRC Error Rate – the number of errors that occur while transferring data over the external interface. Possible causes include poor-quality cables or abnormal operating modes.

• Write Error Rate – the rate of write errors. It can serve as an indicator of the quality of the surface and the mechanics.
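To match these names with the output of monitoring tools, the sketch below lists the attribute IDs conventionally used for them on ATA drives, grouped the way this article groups them, and flags counters whose raw value is normally expected to stay at zero. The IDs are the common conventions (vendors may use some of them differently, and tools may print slightly different names); the `ZERO_EXPECTED` set and the `flag_raw_counters` helper are assumptions made for illustration.

```python
# Conventional ATA attribute IDs for the attributes discussed above, grouped as in
# this article. Vendors may use some IDs differently; names follow the article.
CRITICAL = {
    1: "Raw Read Error Rate",
    3: "Spin Up Time",
    5: "Reallocated Sector Count",
    7: "Seek Error Rate",
    10: "Spin Up Retry Count",
}
INFORMATIVE = {
    4: "Start/Stop Count",
    9: "Power On Hours",
    12: "Drive Power Cycle Count",
    194: "Temperature",
    197: "Current Pending Sector Count",
    198: "Uncorrectable Sector Count",
    199: "UDMA CRC Error Rate",
    200: "Write Error Rate",
}
# Assumption for this sketch: raw counters that stay at zero on a healthy drive.
ZERO_EXPECTED = {5, 10, 197, 198, 199}


def flag_raw_counters(raw: dict[int, int]) -> list[str]:
    """Return the names of zero-expected counters whose raw value is non-zero."""
    names = {**CRITICAL, **INFORMATIVE}
    return [names[i] for i in sorted(raw) if i in ZERO_EXPECTED and raw[i] > 0]


if __name__ == "__main__":
    # Hypothetical raw values keyed by attribute ID.
    print(flag_raw_counters({5: 0, 9: 12000, 197: 3, 199: 1}))
```

Raw values of counters such as Power On Hours are deliberately ignored here, since a large value there is expected rather than alarming.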

All errors that occur, as well as changes in parameters, are recorded in SMART logs. This capability first appeared in SMART II. The set of logs, their purpose, size and number are determined by the drive's manufacturer. For now we are interested only in the fact that the logs exist, not in the details. The information they contain is used to analyse the drive's condition and predict its future behaviour.

Leaving details aside, SMART works quite simply: while the drive is powered on, all errors and suspicious events are tracked and reflected in the relevant attributes. In addition, starting from SMART II, many drives have self-diagnostic functions. SMART tests can be run in two modes: off-line, in which the test runs in the background while the drive remains ready to receive and execute commands, and burst (captive) mode, in which the drive, once it receives the command, runs the test through to completion.

Three types of self-diagnostic tests are documented: off-line data collection, the short self-test and the extended self-test. The latter two can be run in either off-line or burst mode. The actual checks performed within each test are not standardized.

A test can take anywhere from seconds to minutes or even hours. If you are not accessing the drive but it still sounds as if it were under normal load, it is probably busy with self-diagnostics. All data collected by such tests is also saved in the logs and attributes.
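On a system with the open-source smartmontools package, the test types described above map onto options of its `smartctl` utility: `-t offline`, `-t short` and `-t long` start the three tests, and `-C` (`--captive`) runs the short or extended test in captive mode, which corresponds to what is called burst mode here. The helper below only builds the command line and does not run it; the function name and the device path `/dev/sda` are hypothetical. The smartctl manual warns that captive tests keep the drive busy for the whole test, so they should only be run on drives without mounted partitions.

```python
import shlex

# The article's test names mapped to smartctl's "-t" arguments.
TESTS = {
    "offline data collection": "offline",
    "short": "short",
    "extended": "long",
}


def selftest_command(device: str, test: str, captive: bool = False) -> list[str]:
    """Build, but do not run, a smartctl command line that starts a self-test."""
    if test not in TESTS:
        raise ValueError(f"unknown test: {test!r}")
    if captive and test == "offline data collection":
        # Only the short and extended tests can run in captive ("burst") mode.
        raise ValueError("captive mode applies only to short and extended tests")
    cmd = ["smartctl", "-t", TESTS[test]]
    if captive:
        cmd.append("-C")  # the drive stays busy until the test completes
    cmd.append(device)
    return cmd


if __name__ == "__main__":
    # "/dev/sda" is a hypothetical device path.
    print(shlex.join(selftest_command("/dev/sda", "short")))
    print(shlex.join(selftest_command("/dev/sda", "extended", captive=True)))
```

Returning the argument list rather than running it keeps the sketch safe to try; it can be passed to `subprocess.run` once you are sure which drive you are testing.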

Oh, these bad sectors...

Now let's get back to where it all started, i.e. bad sectors. SMART III introduced a function that remaps bad sectors transparently to the user. The mechanism is quite simple. When a sector reads unstably or with an error, SMART adds it to the list of unstable sectors and increases the Current Pending Sector Count. If the sector reads fine on a repeated attempt, it is removed from this list. If not, then at the first opportunity, when the drive is idle, it starts a surface scan in which the suspicious sectors are checked first. If a sector is confirmed as bad, it is remapped to a sector from the spare area (and the Reallocated Sector Count increases accordingly). Because of this off-line remapping, service programs practically never see bad sectors on modern drives. However, if there are many bad sectors, remapping cannot go on forever. The first limit is obvious: the size of the spare area. The second is less obvious: modern drives keep two defect lists, the P-list (Primary, filled at the factory) and the G-list (Grown, filled during operation). After a large number of remaps, the G-list may simply have no room left to record a new one. This situation can be recognized by a high Reallocated Sector Count in SMART. Even then not all is lost, but that is beyond the scope of this article.
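The life cycle of a suspicious sector described above can be modelled as a small state machine. The class below is a toy simulation under stated assumptions, not drive firmware: real drives keep these structures internally in vendor-specific formats, and `ToyDrive`, its methods and the capacities passed to it are hypothetical. It reproduces both limits from the text: remapping stops when either the spare area or the G-list runs out, and the affected sector then stays pending.

```python
from typing import Callable


class ToyDrive:
    """Toy model of off-line remapping (not real firmware behaviour)."""

    def __init__(self, spare_sectors: int, glist_capacity: int) -> None:
        self.spare_sectors = spare_sectors    # size of the spare area
        self.glist_capacity = glist_capacity  # room for entries in the G-list
        self.glist: dict[int, int] = {}       # remapped LBA -> spare sector index
        self.pending: set[int] = set()        # suspicious (unstable) sectors

    @property
    def current_pending_sector_count(self) -> int:
        return len(self.pending)

    @property
    def reallocated_sector_count(self) -> int:
        return len(self.glist)

    def read(self, lba: int, stable: bool) -> None:
        """An unstable read marks the sector as pending; a later stable read clears it."""
        if stable:
            self.pending.discard(lba)
        else:
            self.pending.add(lba)

    def offline_scan(self, is_bad: Callable[[int], bool]) -> None:
        """Idle-time check, reduced here to the pending sectors the drive checks first."""
        limit = min(self.spare_sectors, self.glist_capacity)
        for lba in sorted(self.pending):  # iterate over a sorted copy
            if not is_bad(lba):
                self.pending.discard(lba)          # sector turned out fine
            elif len(self.glist) < limit:
                self.glist[lba] = len(self.glist)  # remap to the next free spare sector
                self.pending.discard(lba)
            # Otherwise: no spare sector or no G-list room left; it stays pending.

    def locate(self, lba: int) -> tuple[str, int]:
        """Remapped sectors are redirected transparently to the spare area."""
        if lba in self.glist:
            return ("spare", self.glist[lba])
        return ("main", lba)


if __name__ == "__main__":
    drive = ToyDrive(spare_sectors=2, glist_capacity=10)
    for lba in (100, 200, 300, 400):
        drive.read(lba, stable=False)
    drive.read(200, stable=True)                 # re-read succeeded
    drive.offline_scan(is_bad=lambda lba: True)  # hypothetical: all others still bad
    print(drive.reallocated_sector_count, drive.current_pending_sector_count)
    print(drive.locate(300), drive.locate(400))
```

In the usage example the spare area holds only two sectors, so the third bad sector cannot be remapped and remains counted as pending, which is the situation the text describes as exhausting the remapping capacity.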

So, using SMART data, you can tell what is wrong with a drive without taking it to a service centre. There are also various add-on technologies built on top of SMART that allow you to determine the drive's condition even more precisely and to identify the cause of a malfunction with practical certainty.

Keep in mind that buying a drive with SMART technology is not enough to be aware of all the problems occurring in it. The drive can, of course, monitor its own condition without outside help, but it cannot warn you of approaching danger by itself. You need something that will warn you based on the SMART data (the usual chain is shown in the image below).

One option is the BIOS, which, if the corresponding setting is enabled, checks the SMART status of the drives at boot. If you want continuous monitoring of the drive's condition, however, you need a monitoring program. It lets you track all the information in detail and in a user-friendly form.
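At its core, a monitoring program reads the attribute table and compares values with thresholds. As an illustration, the sketch below parses the attribute table printed by `smartctl -A` from smartmontools, whose TYPE column distinguishes `Pre-fail` attributes (roughly the article's critical ones) from `Old_age` ones (wear-related, non-critical). The sample text is hypothetical, the parser assumes smartctl's default table layout rather than its brief format, the function names are illustrative, and a threshold of 0 is treated as "never fails".

```python
import re
from dataclasses import dataclass


@dataclass
class SmartRow:
    attr_id: int
    name: str
    value: int
    worst: int
    thresh: int
    pre_fail: bool  # TYPE column: "Pre-fail" vs. "Old_age"
    raw: int        # leading integer of RAW_VALUE, or -1 if there is none


ROW_START = re.compile(r"^\s*\d+\s")  # table rows start with the attribute ID

# Hypothetical excerpt in the layout printed by `smartctl -A`.
SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  1 Raw_Read_Error_Rate     0x000f   117   099   006    Pre-fail  Always       -       153718808
  5 Reallocated_Sector_Ct   0x0033   030   030   036    Pre-fail  Always   FAILING_NOW 1890
194 Temperature_Celsius     0x0022   040   050   000    Old_age   Always       -       40 (Min/Max 18/50)
"""


def parse_attributes(text: str) -> list[SmartRow]:
    rows = []
    for line in text.splitlines():
        if not ROW_START.match(line):
            continue  # skip headers and other non-table lines
        f = line.split(maxsplit=9)  # the last field keeps the whole raw value
        if len(f) < 10 or not all(x.isdigit() for x in f[3:6]):
            continue
        m = re.match(r"\d+", f[9])
        rows.append(SmartRow(int(f[0]), f[1], int(f[3]), int(f[4]), int(f[5]),
                             f[6] == "Pre-fail", int(m.group()) if m else -1))
    return rows


def report(rows: list[SmartRow]) -> list[str]:
    """List attributes whose value has reached a non-zero threshold."""
    out = []
    for r in rows:
        if r.thresh > 0 and r.value <= r.thresh:  # threshold 0: never fails
            status = "FAILING" if r.pre_fail else "DEGRADED"
            out.append(f"{r.name}: {status} ({r.value} <= {r.thresh})")
    return out


if __name__ == "__main__":
    for line in report(parse_attributes(SAMPLE)):
        print(line)
```

To feed it real data, the output can be captured with `subprocess.run(["smartctl", "-A", "/dev/sda"], capture_output=True, text=True).stdout` (this usually requires root privileges, and `/dev/sda` is again a placeholder). smartctl's exit status is a bit mask that can be non-zero even when the table was printed, so `check=True` is best avoided here.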

SmartMonitor from HDD Speed, running under DOS

SIGuardian, running under Windows

Each of these programs deserves a separate article. This is what I meant earlier when I said that the minimum requirements for normal SMART operation were often not met: without such software, nobody acts on the data SMART collects.

Today I would like to talk about SMART technology and also clarify why bad sectors appear during surface checks with special programs and what happens when the spare area for remapping is exhausted.
