That’s just great: bad sectors on the disk. Things were looking gloomy, so I decided to check the SMART data. I have to admit I hadn’t done that for quite a long time.

    SMART Attributes Data Structure revision number: 16
    Vendor Specific SMART Attributes with Thresholds:
    ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
      1 Raw_Read_Error_Rate     0x000b   100   100   062    Pre-fail  Always       -       0
      2 Throughput_Performance  0x0005   100   100   040    Pre-fail  Offline      -       0
      3 Spin_Up_Time            0x0007   192   192   033    Pre-fail  Always       -       2
      4 Start_Stop_Count        0x0012   099   099   000    Old_age   Always       -       1904
      5 Reallocated_Sector_Ct   0x0033   100   100   005    Pre-fail  Always       -       0
      7 Seek_Error_Rate         0x000b   100   100   067    Pre-fail  Always       -       0
      8 Seek_Time_Performance   0x0005   100   100   040    Pre-fail  Offline      -       0
      9 Power_On_Hours          0x0012   085   085   000    Old_age   Always       -       6651
     10 Spin_Retry_Count        0x0013   100   100   060    Pre-fail  Always       -       0
     12 Power_Cycle_Count       0x0032   100   100   000    Old_age   Always       -       965
    191 G-Sense_Error_Rate      0x000a   100   100   000    Old_age   Always       -       0
    192 Power-Off_Retract_Count 0x0032   100   100   000    Old_age   Always       -       2
    193 Load_Cycle_Count        0x0012   071   071   000    Old_age   Always       -       299688
    194 Temperature_Celsius     0x0002   144   144   000    Old_age   Always       -       38 (Lifetime Min/Max 11/44)
    196 Reallocated_Event_Count 0x0032   100   100   000    Old_age   Always       -       5
    197 Current_Pending_Sector  0x0022   100   100   000    Old_age   Always       -       1
    198 Offline_Uncorrectable   0x0008   100   100   000    Old_age   Offline      -       0
    199 UDMA_CRC_Error_Count    0x000a   200   200   000    Old_age   Always       -       0

And here is our hero: a bad sector along with four of its friends. It’s a shame about the large file I had downloaded (although its MD5 sum still turned out correct), but losing data would be even worse, so I immediately backed up my important data. A day later I ran the full (extended) SMART self-test, and it completed without errors. Now the bad sector has disappeared (for me, not for SMART): Current_Pending_Sector is back to zero, and Reallocated_Event_Count has grown to six.

    SMART Attributes Data Structure revision number: 16
    Vendor Specific SMART Attributes with Thresholds:
    ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
      1 Raw_Read_Error_Rate     0x000b   099   099   062    Pre-fail  Always       -       0
      2 Throughput_Performance  0x0005   100   100   040    Pre-fail  Offline      -       0
      3 Spin_Up_Time            0x0007   204   204   033    Pre-fail  Always       -       2
      4 Start_Stop_Count        0x0012   099   099   000    Old_age   Always       -       1906
      5 Reallocated_Sector_Ct   0x0033   100   100   005    Pre-fail  Always       -       0
      7 Seek_Error_Rate         0x000b   100   100   067    Pre-fail  Always       -       0
      8 Seek_Time_Performance   0x0005   100   100   040    Pre-fail  Offline      -       0
      9 Power_On_Hours          0x0012   085   085   000    Old_age   Always       -       6659
     10 Spin_Retry_Count        0x0013   100   100   060    Pre-fail  Always       -       0
     12 Power_Cycle_Count       0x0032   100   100   000    Old_age   Always       -       966
    191 G-Sense_Error_Rate      0x000a   100   100   000    Old_age   Always       -       0
    192 Power-Off_Retract_Count 0x0032   100   100   000    Old_age   Always       -       2
    193 Load_Cycle_Count        0x0012   071   071   000    Old_age   Always       -       299691
    194 Temperature_Celsius     0x0002   171   171   000    Old_age   Always       -       32 (Lifetime Min/Max 11/44)
    196 Reallocated_Event_Count 0x0032   100   100   000    Old_age   Always       -       6
    197 Current_Pending_Sector  0x0022   100   100   000    Old_age   Always       -       0
    198 Offline_Uncorrectable   0x0008   100   100   000    Old_age   Offline      -       0
    199 UDMA_CRC_Error_Count    0x000a   200   200   000    Old_age   Always       -       0
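Spotting what changed between two snapshots by eye is error-prone, so here is a minimal Python sketch that diffs raw values attribute by attribute. The raw values are typed in by hand from the two tables above (for Temperature_Celsius only the current reading is kept); the `diff_raw` helper and the set of "sector" attributes are my own illustration, not part of smartmontools.

```python
# Raw values typed in from the two tables above: attribute ID -> RAW_VALUE.
# For Temperature_Celsius (194) only the current reading is kept.
before = {4: 1904, 5: 0, 9: 6651, 12: 965, 193: 299688, 194: 38,
          196: 5, 197: 1, 198: 0}
after = {4: 1906, 5: 0, 9: 6659, 12: 966, 193: 299691, 194: 32,
         196: 6, 197: 0, 198: 0}

# Attributes that track bad sectors (an illustrative choice, not an official list).
SECTOR_IDS = {5, 196, 197, 198}


def diff_raw(old, new, ids=None):
    """Return {id: (old, new)} for attributes whose raw value changed.

    Only IDs present in both snapshots are compared; if `ids` is given,
    the comparison is limited to those attributes.
    """
    keys = sorted(set(old) & set(new))
    if ids is not None:
        keys = [k for k in keys if k in ids]
    return {k: (old[k], new[k]) for k in keys if old[k] != new[k]}


print(diff_raw(before, after, SECTOR_IDS))
```

For these two snapshots it reports that Reallocated_Event_Count went from 5 to 6 and Current_Pending_Sector from 1 to 0, which is consistent with the suspect sector having been dealt with (most likely remapped) during the extended test.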

Frankly, I have already started saving up for a new drive, and in the meantime I decided to find out why hard drives die.

The articles I refer to are scientific, so you won’t find cries like “Samsung rules and Seagate sucks” or shopping tips like “buy at William’s and you can’t go wrong” in them. For those unfamiliar with the scientific genre: a paper is not a recipe but rather food for further thought. Everything is written in a diplomatic, guarded way so that the reader can catch the main ideas, but don’t expect the results of long research to be served to you on a silver platter. It reminds me of one housewife sharing the recipe for her signature dish with another: all the ingredients seem to be mentioned, but the final special touch that makes the dish so tasty is most likely kept secret.

One way or another, I tried to follow the intricacies of the authors’ reasoning and finally managed to fish some useful findings out of it.

Reasons, statistics, analysis

So, the article "Failure Trends in a Large Disk Drive Population" sheds some light on the causes of drive failures in Google’s servers. The authors collected data from December 2005 to August 2006 on almost 100,000 hard drives: serial and parallel ATA, 5400 and 7200 RPM, with capacities from 80 to 400 GB, made by different manufacturers.

The main point the Googlers make is that SMART is not a cure-all: in most cases (and for an individual drive in particular), looking at SMART data alone tells you little. Most of their drives died at a time when, according to SMART, they were perfectly fine and had no errors recorded.

The paper also reports that the probability of drive failure is only weakly correlated with utilization (load). But if SMART starts reporting errors such as scan errors, reallocation counts, offline reallocation counts, and probational counts, that is bad news and you had better start backing up :-)

Age

As expected, the probability of failure grows with the drive’s age, i.e. with its operating time. Among drives up to 1 year old, many die within the first three months. The failure probability jumps sharply at 2 years.

Manufacturer

According to the study, contrary to conventional wisdom, hard drive failure depends only weakly on the manufacturer; it depends more on the particular drive and, to a lesser extent, on its operating conditions. As evidence, the authors point out that the failure data recorded by SMART hardly differ between manufacturers.

Loads

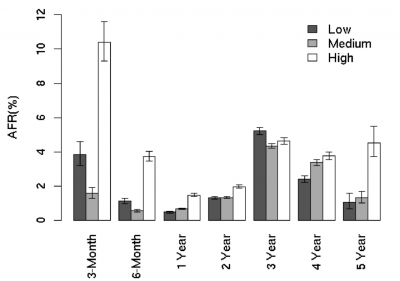

Next, the authors present data on how the failure rate depends on utilization (i.e. on the amount of disk activity).

Exhibit from "Failure Trends in a Large Disk Drive Population", Eduardo Pinheiro, Wolf-Dietrich Weber and Luiz Andre Barroso, Google Inc., Appears in the Proceedings of the 5th USENIX Conference on File and Storage Technologies (FAST’07), February 2007

It turns out that high utilization noticeably increases the failure rate only for very young (up to 3 months) and very old (over 3 years) drives; in the other age groups, load has only a weak effect on the chance of failure.

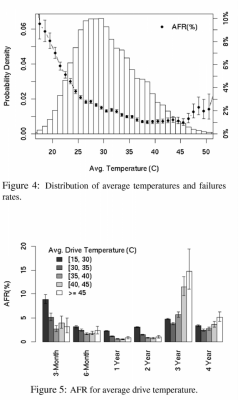

Temperature

It is commonly believed that temperature is the most important factor for a hard drive and that drives are better kept cool. The main thing here is not to overdo it: a drive temperature below 15 degrees Celsius doubles the average failure rate.

Exhibit from "Failure Trends in a Large Disk Drive Population", Eduardo Pinheiro, Wolf-Dietrich Weber and Luiz Andre Barroso, Google Inc., Appears in the Proceedings of the 5th USENIX Conference on File and Storage Technologies (FAST’07), February 2007

The Googlers found that the risk of failure grows only slowly as the drive’s temperature rises; moreover, there is a trend showing that low temperatures are more harmful to disks. Interestingly, the lowest failure risk is observed in the range from 36 to 45 degrees. At temperatures below 25 degrees the failure risk is almost double that at 45 degrees, and it rises quickly as the temperature drops.

Drives younger than 2 years tend to die from cold (in the 15 to 30 degree range), while older drives (from 3 years) die from overheating (above 45 degrees).
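To see where your own drive sits relative to these ranges, you can read the current temperature from the Temperature_Celsius raw value. Below is a minimal Python sketch, assuming the raw field looks like the ones printed in this article (e.g. `38 (Lifetime Min/Max 11/44)`); other drives and smartctl versions may format it differently. The 36-45 degree band comes from the text above, and the function names are hypothetical.

```python
import re

# Matches raw values such as "38 (Lifetime Min/Max 11/44)", as printed in this article.
# Other drives or smartctl versions may use a different format (assumption).
TEMP_RE = re.compile(r"^\s*(\d+)(?:\s*\((?:Lifetime\s+)?Min/Max\s+(\d+)/(\d+)\))?")


def parse_temperature(raw):
    """Return (current, lifetime_min, lifetime_max) in degrees Celsius.

    The min/max values are None when the raw field does not contain them.
    """
    m = TEMP_RE.match(raw)
    if m is None:
        raise ValueError(f"unrecognised temperature field: {raw!r}")
    cur, lo, hi = m.groups()
    return int(cur), (int(lo) if lo else None), (int(hi) if hi else None)


def in_lowest_risk_band(celsius, low=36, high=45):
    """True if the reading lies in the 36-45 degree band quoted from the Google study."""
    return low <= celsius <= high


for raw in ("38 (Lifetime Min/Max 11/44)", "32 (Lifetime Min/Max 11/44)",
            "28 (Lifetime Min/Max 11/44)"):
    current, lowest, highest = parse_temperature(raw)
    print(current, lowest, highest, in_lowest_risk_band(current))
```

Of the three snapshots in this article (38, 32 and 28 degrees), only the first falls inside that band, and the lifetime minimum of 11 degrees is below the 15-degree mark mentioned above.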

SMART data analysis

The most important errors to pay attention to are: Scan Error, Reallocation Count, Offline Reallocation, and Probational Count.

Scan Error

The drive electronics periodically scan the surface and report the results to SMART; any bad sectors found are, as a rule, soon remapped to spare ones. However, the Googlers report that after the first surface scan error, the chance of failure within the next 60 days increases almost 40-fold!

Reallocation Count

If the system gets input/output errors while reading data, SMART registers them, and the bad sector is replaced with a good one from the pool of spare sectors. The reallocation count reflects surface wear, yet on its own it is not a reason to sound the alarm: around 90% of Google’s hard drives have a non-zero reallocation count, and such drives have an annualized failure rate (AFR) 3-6 times higher. After the first reallocation of a bad sector, the chance of failure within the next 60 days increases 14-fold.

Other errors (including seek errors) contribute little to the overall statistical picture of drive failures. Notably, drive failure is only weakly associated with the number of start/stop cycles. However, a drive older than 3 years is better left running continuously, because frequent power cycling increases its failure probability by 2%.

Overall, the Googlers advise against relying on SMART because of its low predictive power (over 56% of all failed drives had no SMART errors recorded). They also advise making backups more often, because practice shows that almost nobody does so until the need arises.

And for dessert, the tastiest part: the distribution of SMART errors among failed drives. The Google drive cemetery shows the following breakdown:

Failures not detected by SMART: 60%

Reallocation Count: approx. 40%

Seek Error: 30%

Offline Reallocation: 28%

Probational Count: 20%

Scan Error: 15%

CRC Error: less than 5%

The percentages add up to well over 100%, so a failed drive usually shows not a single type of error but several. The leading categories are bad sectors and seek errors.

Mean time to failure

The second article, "Disk failures in the real world: What does an MTTF of 1,000,000 hours mean to you?", discusses in detail what MTTF (mean time to failure) actually means. Its statistics are impressive too (around 100,000 drives).

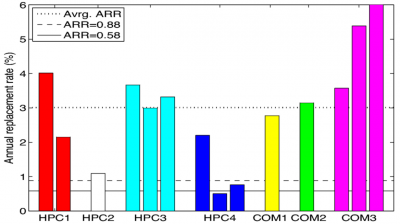

Manufacturers usually describe reliability with two related metrics: the annualized failure rate (AFR) and the mean time to failure (MTTF). AFR is estimated by extrapolating the results of accelerated life tests, and MTTF is computed as the number of operating hours per year divided by AFR. Leading drive manufacturers claim an MTTF of 1 million to 1.5 million hours for their devices, which corresponds to an AFR of 0.88% and 0.58% respectively.
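The relation between the two metrics is easy to check numerically. A minimal Python sketch, assuming 24/7 operation (8,760 hours per year); the function names are hypothetical. `afr_exponential` is an alternative I add for comparison: it assumes a constant failure rate (exponentially distributed lifetimes) and gives almost the same answer when AFR is small.

```python
import math

HOURS_PER_YEAR = 24 * 365  # 8760 hours, assuming the drive runs around the clock


def mttf_from_afr(afr):
    """MTTF in hours from an AFR given as a fraction (0.0088 means 0.88%)."""
    return HOURS_PER_YEAR / afr


def afr_from_mttf(mttf_hours):
    """AFR as a fraction from an MTTF in hours (the inverse of mttf_from_afr)."""
    return HOURS_PER_YEAR / mttf_hours


def afr_exponential(mttf_hours):
    """Probability of failing within one year if lifetimes are exponentially
    distributed with the given mean; close to afr_from_mttf when AFR is small."""
    return 1 - math.exp(-HOURS_PER_YEAR / mttf_hours)


for afr in (0.0088, 0.0058):
    print(f"AFR {afr:.2%} -> MTTF {mttf_from_afr(afr):,.0f} hours")
```

For AFRs of 0.88% and 0.58% this gives roughly 1.0 and 1.5 million hours, matching the manufacturers’ figures.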

For the curious: manufacturers rely on such forecasts because testing drives by simply switching them on and leaving them running for 4-5 years is not an option: the manufacturer needs reliability data right now, not in 5 years, when these drives will be hopelessly obsolete. That is why so-called accelerated tests were developed. During such a test, the devices are deliberately subjected to extreme conditions (extreme heat or cold and very heavy loads), and the manufacturer records how long a drive survives before it fails. Naturally, drives die quickly under these conditions, and the data are then extrapolated (i.e. projected) onto normal operating conditions. Of course, the predictive power of such forecasts is not high, but it is better than nothing.

The raw data from such research are usually closely guarded by the manufacturer and never leave the lab. The resulting numbers are then polished by the marketing department and posted on the website in small print for anyone who is interested.

Looking for the truth in these muddy waters is a thankless task, and all that is left is to rely on scientific studies of varying credibility that are presented from time to time at conferences.

The article states that the authors’ data on drive replacement rates due to failure differ, to say the least, from the figures published by manufacturers. For example, in the three data centres that supplied data for the article over a 5-year period, drives were replaced due to failure somewhat more often than memory modules, 2.5 times more often than processors, and 2 times more often than motherboards. The fact remains: hard drive failure is one of the most common causes of data centre downtime related to hardware replacement.

The authors then calculated the AFR for all the data centres covered by the study; here is the diagram:

Diagram taken from: Bianca Schroeder, Garth A. Gibson, "Disk failures in the real world: What does an MTTF of 1,000,000 hours mean to you?", FAST ’07: 5th USENIX Conference on File and Storage Technologies, USENIX Association.

It speaks for itself: the solid horizontal line corresponds to the advertised MTTF of 1.5 million hours, the dashed line to 1 million hours, and the dotted line to the actual observed average. According to it, the AFR is about 3%, which corresponds to an MTTF of around 300,000 hours.
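Plugging the observed 3% into the same relation the manufacturers use (operating hours per year divided by AFR, assuming 24/7 operation) gives the MTTF quoted above, and also shows where the "three-fold" overestimate in the conclusions comes from:

$$
\mathrm{MTTF} = \frac{8760\ \mathrm{h}}{\mathrm{AFR}} = \frac{8760\ \mathrm{h}}{0.03} = 292\,000\ \mathrm{h} \approx 300\,000\ \mathrm{h},
\qquad
\frac{1\,000\,000\ \mathrm{h}}{292\,000\ \mathrm{h}} \approx 3.4
$$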

And even this number is on the optimistic side: it is no secret that data centres do everything they can to extend the life of their equipment, with air conditioning, computing load balancing, protection of individual components against overheating or overcooling, and so on. A home machine is unlikely to have anything similar, and this applies to laptops in particular.

The figures also vary a lot: AFR ranges from 0.5% to 13.6%, and these are data-centre numbers. The latter corresponds to an MTTF of only around 7 years, and in a home environment the figure is clearly even lower: constantly changing device temperature, short periods of continuous operation, power surges, a large number of on/off cycles, and so on. All these factors shorten a drive’s life.
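Converting these AFR values to years is even simpler: with 8,760 operating hours per year (again assuming 24/7 operation), the MTTF in years is just the reciprocal of the AFR:

$$
\mathrm{MTTF}_{\text{years}} = \frac{8760\ \mathrm{h} / \mathrm{AFR}}{8760\ \mathrm{h/year}} = \frac{1}{\mathrm{AFR}},
\qquad
\frac{1}{0.136} \approx 7.4\ \text{years},
\qquad
\frac{1}{0.005} = 200\ \text{years}
$$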

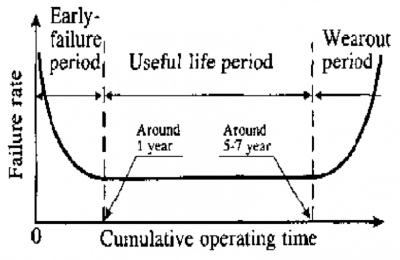

There is another wonderful diagram showing how the failure rate of hard drives changes with operating time:

Diagram from J. Yang and F.-B. Sun, "A comprehensive review of hard-disk drive reliability". In Proc. of the Annual Reliability and Maintainability Symposium, 1999.

This shape has a witty name: the "bathtub curve" :-)
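A common way to model such a curve (not taken from the cited review) is to add up three hazard rates: a decreasing Weibull term for infant mortality, a constant term for random failures, and an increasing Weibull term for wear-out. A minimal Python sketch; all parameters are made up for illustration and are not fitted to any drive data.

```python
def weibull_hazard(t, shape, scale):
    """Weibull hazard rate h(t) = (k / lam) * (t / lam) ** (k - 1), for t > 0."""
    return (shape / scale) * (t / scale) ** (shape - 1)


def bathtub_hazard(t_years):
    """Illustrative bathtub curve in failures per drive-year.

    Sum of a decreasing infant-mortality term (shape < 1), a constant
    random-failure term, and an increasing wear-out term (shape > 1).
    All parameters are made up for illustration, not fitted to real drives.
    """
    infant_mortality = weibull_hazard(t_years, shape=0.5, scale=50.0)
    random_failures = 0.02
    wear_out = weibull_hazard(t_years, shape=5.0, scale=8.0)
    return infant_mortality + random_failures + wear_out


for t in (0.1, 0.5, 1, 2, 3, 5, 7, 9):
    print(f"{t:>4} years: {bathtub_hazard(t):.3f}")
```

With these parameters the hazard falls over the first couple of years, bottoms out at around two to three years, and then climbs steeply: the bathtub shape.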

Interesting conclusions:

1. The MTTF declared by manufacturers is about three times higher than the real one estimated in the data centres.

2. For old drives that have worked for 5-8 years, manufacturers overestimate MTTF by a factor of more than 30.

3. Even for relatively new hard drives (less than 3 years old), the manufacturer’s MTTF is 6 times higher than the real one.

4. Replacement rates for expensive SCSI drives and ordinary SATA drives are almost identical.

And a bit more on drive age: for drives younger than 5 years, the replacement rate due to failures is 2-10 times higher than the datasheet MTTF implies, and for drives 5 to 8 years old it is about 30 times higher.

How to check SMART information

You need the smartmontools package, which provides the smartctl tool. Then run (as root):

• for IDE disks: smartctl --all /dev/hda

• for SCSI disks: smartctl --all /dev/sda

• for SATA disks: smartctl --all -d ata /dev/sda
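If you want to script this check, note that smartctl also reports problems through its exit status, which is a bitmask documented in the smartctl(8) man page. A minimal Python sketch that runs the command and decodes that status; `run_smartctl` and `decode_exit_status` are hypothetical helper names, and actually running it requires smartmontools and, usually, root privileges.

```python
import subprocess

# Meaning of each bit of smartctl's exit status (see the smartctl(8) man page).
EXIT_BITS = {
    0: "command line did not parse",
    1: "device open failed, or device is in a low-power mode",
    2: "a SMART or other ATA command to the disk failed",
    3: "SMART status check returned 'DISK FAILING'",
    4: "prefail attributes at or below threshold",
    5: "some attributes were at or below threshold in the past",
    6: "the device error log contains records of errors",
    7: "the device self-test log contains records of errors",
}


def decode_exit_status(status):
    """Return the problems encoded in smartctl's exit status, lowest bit first."""
    return [msg for bit, msg in EXIT_BITS.items() if status & (1 << bit)]


def run_smartctl(device, extra_args=()):
    """Run `smartctl --all [extra_args] device`; return (report text, decoded problems)."""
    cmd = ["smartctl", "--all", *extra_args, device]
    result = subprocess.run(cmd, capture_output=True, text=True)
    return result.stdout, decode_exit_status(result.returncode)
```

For example, `run_smartctl("/dev/sda", ("-d", "ata"))` corresponds to the SATA command in the list. A drive with entries in its error log, like the one shown later in this article, should come back with bit 6 set.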

You will see a long table with a lot of interesting information.

    smartctl version 5.36 [i686-pc-linux-gnu] Copyright (C) 2002-6 Bruce Allen
    Home page is http://smartmontools.sourceforge.net/

    === START OF INFORMATION SECTION ===
    Device Model:     HTS421260H9AT00
    Serial Number:    HKA210AJGKHV1B
    Firmware Version: HA2OA70G
    User Capacity:    60.011.642.880 bytes
    Device is:        Not in smartctl database [for details use: -P showall]
    ATA Version is:   7
    ATA Standard is:  ATA/ATAPI-7 T13 1532D revision 1
    Local Time is:    Fri Oct 19 17:12:58 2007 MSD
    SMART support is: Available - device has SMART capability.
    SMART support is: Enabled

This is general information about the hard drive: model, serial number, capacity, and so on.

    === START OF READ SMART DATA SECTION ===
    SMART overall-health self-assessment test result: PASSED

    General SMART Values:
    Offline data collection status:  (0x00) Offline data collection activity
                                            was never started.
                                            Auto Offline Data Collection: Disabled.
    Self-test execution status:      ( 0)   The previous self-test routine completed
                                            without error or no self-test has ever
                                            been run.
    Total time to complete Offline
    data collection:                 ( 645) seconds.
    Offline data collection
    capabilities:                    (0x5b) SMART execute Offline immediate.
                                            Auto Offline data collection on/off support.
                                            Suspend Offline collection upon new
                                            command.
                                            Offline surface scan supported.
                                            Self-test supported.
                                            No Conveyance Self-test supported.
                                            Selective Self-test supported.
    SMART capabilities:            (0x0003) Saves SMART data before entering
                                            power-saving mode.
                                            Supports SMART auto save timer.
    Error logging capability:        (0x01) Error logging supported.
                                            General Purpose Logging supported.
    Short self-test routine
    recommended polling time:        ( 2)   minutes.
    Extended self-test routine
    recommended polling time:        ( 47)  minutes.

This section lists the SMART self-tests the drive supports and how long they take.

It is followed by the most interesting part:

    SMART Attributes Data Structure revision number: 16
    Vendor Specific SMART Attributes with Thresholds:
    ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
      1 Raw_Read_Error_Rate     0x000b   100   100   062    Pre-fail  Always       -       0
      2 Throughput_Performance  0x0005   100   100   040    Pre-fail  Offline      -       0
      3 Spin_Up_Time            0x0007   202   202   033    Pre-fail  Always       -       1
      4 Start_Stop_Count        0x0012   099   099   000    Old_age   Always       -       1930
      5 Reallocated_Sector_Ct   0x0033   100   100   005    Pre-fail  Always       -       0
      7 Seek_Error_Rate         0x000b   100   100   067    Pre-fail  Always       -       0
      8 Seek_Time_Performance   0x0005   100   100   040    Pre-fail  Offline      -       0
      9 Power_On_Hours          0x0012   085   085   000    Old_age   Always       -       6745
     10 Spin_Retry_Count        0x0013   100   100   060    Pre-fail  Always       -       0
     12 Power_Cycle_Count       0x0032   100   100   000    Old_age   Always       -       978
    191 G-Sense_Error_Rate      0x000a   100   100   000    Old_age   Always       -       0
    192 Power-Off_Retract_Count 0x0032   100   100   000    Old_age   Always       -       2
    193 Load_Cycle_Count        0x0012   071   071   000    Old_age   Always       -       299731
    194 Temperature_Celsius     0x0002   196   196   000    Old_age   Always       -       28 (Lifetime Min/Max 11/44)
    196 Reallocated_Event_Count 0x0032   100   100   000    Old_age   Always       -       6
    197 Current_Pending_Sector  0x0022   100   100   000    Old_age   Always       -       0
    198 Offline_Uncorrectable   0x0008   100   100   000    Old_age   Offline      -       0
    199 UDMA_CRC_Error_Count    0x000a   200   200   000    Old_age   Always       -       0

The lines to pay attention to are those related to bad sectors: Reallocated_Sector_Ct, Reallocated_Event_Count, Current_Pending_Sector and Offline_Uncorrectable. If the raw value in any of these lines is non-zero, the drive has had errors. That is not a reason for panic yet, just a signal to look up the address and phone number of the nearest service centre. It is also a good idea to make a rescue copy while it is still possible (copy all the data to a new hard drive), or at least back up /etc and /home.
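Checking these lines by eye is easy for one drive, but for several machines a script helps. A minimal Python sketch that parses the attribute table printed by `smartctl --all` (or `smartctl -A`) and flags a non-zero raw value in four bad-sector attributes (Reallocated_Sector_Ct, Reallocated_Event_Count, Current_Pending_Sector, Offline_Uncorrectable; my own choice of list), plus any normalized value at or below a non-zero threshold. The column layout is assumed to match the tables in this article, the sample rows come from the first snapshot, and the helper names are hypothetical.

```python
# Bad-sector attributes worth watching (an illustrative choice, not an official list).
WATCH_IDS = {5, 196, 197, 198}


def parse_attributes(text):
    """Parse smartctl's attribute table into {id: (name, value, worst, thresh, raw)}."""
    attrs = {}
    for line in text.splitlines():
        fields = line.split()
        # Attribute rows: numeric ID, name, hex FLAG, then at least 7 more columns.
        # Everything from column 10 on is the raw value, which may contain spaces.
        if len(fields) >= 10 and fields[0].isdigit() and fields[2].startswith("0x"):
            value, worst, thresh = (int(x) for x in fields[3:6])
            attrs[int(fields[0])] = (fields[1], value, worst, thresh, " ".join(fields[9:]))
    return attrs


def smart_warnings(attrs):
    """List watched attributes with a non-zero raw value and any normalized
    value that has dropped to or below a non-zero threshold."""
    out = []
    for attr_id, (name, value, _worst, thresh, raw) in sorted(attrs.items()):
        # Assumes the watched attributes have a plain integer as the first raw token.
        if attr_id in WATCH_IDS and int(raw.split()[0]) != 0:
            out.append(f"{name} ({attr_id}): raw value {raw}")
        if thresh > 0 and value <= thresh:
            out.append(f"{name} ({attr_id}): value {value} <= threshold {thresh}")
    return out


SAMPLE = """\
  5 Reallocated_Sector_Ct   0x0033   100   100   005    Pre-fail  Always       -       0
194 Temperature_Celsius     0x0002   144   144   000    Old_age   Always       -       38 (Lifetime Min/Max 11/44)
196 Reallocated_Event_Count 0x0032   100   100   000    Old_age   Always       -       5
197 Current_Pending_Sector  0x0022   100   100   000    Old_age   Always       -       1
"""

for warning in smart_warnings(parse_attributes(SAMPLE)):
    print(warning)
```

The hex-FLAG check keeps other numeric-looking lines of the full report (such as the error-log register dumps) from being mistaken for attribute rows.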

    Error 4 occurred at disk power-on lifetime: 6652 hours (277 days + 4 hours)
      When the command that caused the error occurred, the device was active or idle.

      After command completion occurred, registers were:
      ER ST SC SN CL CH DH
      -- -- -- -- -- -- --
      40 51 d6 ee 60 22 e2  Error: UNC 214 sectors at LBA = 0x022260ee = 35807470

      Commands leading to the command that caused the error were:
      CR FR SC SN CL CH DH DC   Powered_Up_Time  Command/Feature_Name
      -- -- -- -- -- -- -- --  ----------------  --------------------
      25 00 00 c4 60 22 e0 00      00:29:58.200  READ DMA EXT
      25 00 00 c4 5f 22 e0 00      00:29:58.200  READ DMA EXT
      25 00 00 c4 5e 22 e0 00      00:29:58.200  READ DMA EXT
      25 00 00 c4 5d 22 e0 00      00:29:58.200  READ DMA EXT
      25 00 00 c4 5c 22 e0 00      00:29:58.100  READ DMA EXT

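This is the error log, which lists the errors the drive has recorded, if any. In my case it was an uncorrectable read error (UNC), which the system reported as an input/output error.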
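The LBA in the error entry can be reconstructed from the register dump, which is handy when you want to locate the damaged area on the disk. A minimal Python sketch, assuming the usual 28-bit LBA layout of the ATA task-file registers (SN holds bits 0-7, CL bits 8-15, CH bits 16-23, and the low nibble of DH bits 24-27) and 512-byte sectors; the function name is hypothetical.

```python
SECTOR_SIZE = 512  # bytes; assumes a drive with 512-byte logical sectors


def lba28_from_registers(dh, ch, cl, sn):
    """Rebuild a 28-bit LBA from the ATA registers shown in the smartctl error log.

    SN = bits 0-7, CL = bits 8-15, CH = bits 16-23, low nibble of DH = bits 24-27.
    """
    return ((dh & 0x0F) << 24) | (ch << 16) | (cl << 8) | sn


# Registers from the "Error 4" entry above: SN=ee CL=60 CH=22 DH=e2
lba = lba28_from_registers(dh=0xE2, ch=0x22, cl=0x60, sn=0xEE)
print(hex(lba), lba, lba * SECTOR_SIZE)
```

For the entry above this yields 0x022260ee = 35807470, the same LBA that smartctl prints, i.e. a byte offset of 18,333,424,640 from the start of the disk. Likewise, the sector count register SC = d6 (214) matches "UNC 214 sectors".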

After that come the results of previously run self-tests. If any of them completed with errors, that is a sign the drive may fail soon.

    SMART Self-test log structure revision number 1
    Num  Test_Description    Status                  Remaining  LifeTime(hours)  LBA_of_first_error
    # 1  Extended offline    Completed without error       00%      6651         -
    # 2  Short offline       Completed without error       00%      6651         -
    # 3  Short offline       Completed without error       00%      3097         -
    # 4  Short offline       Completed without error       00%       806         -
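The same kind of check can be automated for the self-test log. A minimal Python sketch that flags entries whose status mentions a failure or whose last column (LBA_of_first_error) is not "-". The layout is assumed to match the log above, the helper name is hypothetical, and the failing entry in the sample is made up purely for illustration.

```python
import re

# Self-test log entries look like "# 1  Extended offline  Completed without error  00%  6651  -".
ENTRY_RE = re.compile(r"^#\s*(\d+)\s+(.+)$")


def self_test_failures(log_text):
    """Return (entry number, LBA_of_first_error) for entries that report a failure."""
    failures = []
    for line in log_text.splitlines():
        m = ENTRY_RE.match(line.strip())
        if m is None:
            continue
        number, rest = int(m.group(1)), m.group(2)
        lba = rest.split()[-1]  # last column: "-" unless the test hit a bad sector
        if lba != "-" or "failure" in rest.lower():
            failures.append((number, lba))
    return failures


LOG = """\
Num  Test_Description    Status                  Remaining  LifeTime(hours)  LBA_of_first_error
# 1  Extended offline    Completed without error       00%      6651         -
# 2  Short offline       Completed without error       00%      6651         -
# 3  Short offline       Completed without error       00%      3097         -
# 4  Short offline       Completed without error       00%       806         -
"""

# A made-up failing entry, for illustration only.
BAD = "# 5  Extended offline    Completed: read failure       90%      6660         12345678"

print(self_test_failures(LOG))
print(self_test_failures(LOG + BAD))
```

For the real log above the list is empty, while the made-up entry is reported together with its first bad LBA.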

I really hope this article helps you find out why your hard drive failed.

Each of us has it. It is literally our everything, a small piece of high-tech and precise mechanics that contains our priceless data: photos, texts, movies, music, configurations and operating system. It is a hard drive, and sooner or later it fails. So why?
