RAID is a storage technology that combines multiple physical disks into one or more logical units. The system presents each unit as a single drive, and the way data is distributed across the physical media in the array depends on its so-called level. Levels are denoted by the digits that follow the acronym RAID, such as RAID 0 or RAID 10, and each strikes a different balance between the two key goals of an array: greater reliability and data safety on the one hand, and performance, such as read/write speed, on the other.

The most popular RAID levels are:

- RAID 0 (striping) - combining disks to significantly improve performance

- RAID 1 (mirroring) - duplicating data across disks (usually limited to two)

- RAID 5 - RAID 0 extended with parity data (for safety)

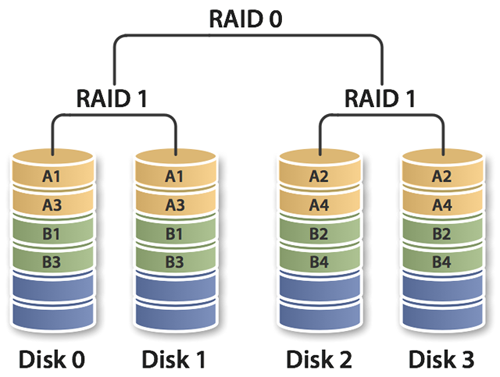

- RAID 10 - put simply, a RAID 0 built from two RAID 1 arrays

Besides the main division by level, RAID is also classified by implementation. There are three kinds: software, hardware, and driver/firmware based. Software RAID (Soft RAID), as the name implies, is implemented in software. Most operating systems support it: Windows 7 Professional, for example, lets you create RAID 0, RAID 1 and JBOD, and the server version also supports RAID 5. The most important advantage of this type of array is a standard (often open) data format, which allows migration to other systems that support the same standard. In addition, software RAID is independent of the underlying hardware and allows media with different interfaces (such as SATA, SCSI and USB) to be mixed in a single array. The disadvantages are the CPU load at the more computationally demanding levels (such as RAID 6), since all calculations are performed by the CPU, and the fact that the system boot partition should not (or even cannot) reside in the array.

The inability to boot the operating system from the array is solved by hardware RAID, implemented as a dedicated controller. In hardware RAID all computations are performed by a specialized processor, which improves performance. Arrays created on dedicated controllers are completely transparent to the system. Some controllers allow different media standards to be combined, and more expensive models also have a cache to improve performance. This memory can be protected by a battery pack to guard against losing cached data in a power failure. Some controllers can also be upgraded with modules that improve IOPS, a response to the growing popularity of SSD arrays. One drawback is the on-disk data format, which can differ not only from manufacturer to manufacturer but even from controller to controller, and which severely limits the options for recovering data if the controller itself is damaged. Another serious drawback is cost: dedicated controllers are expensive, especially models with cache and battery backup.

The last form of RAID implementation is a mixture of the previous two. Fake RAID, as it is popularly called, is found on all kinds of motherboards, and also on cheap "RAID controller" expansion cards. These controllers have no dedicated processor, so all calculations, such as parity in RAID 5, are performed by the computer's CPU. Most are ordinary disk controllers fitted with special firmware for managing arrays. The operating system needs drivers to work with such a controller. All of these aspects count as disadvantages of this type of RAID. The advantages are lower cost compared with hardware RAID and, depending on which software RAID it is compared with, a greater number of available levels. Another advantage is the ability to boot the system from such an array, provided, of course, that the system can find the appropriate drivers. The surcharge for a more expensive Windows edition that supports software RAID may also be greater than the surcharge for a higher-end motherboard that supports fake RAID.

It is also worth mentioning hot spares, backup disks used by both hardware and software RAID. Such drives are idle but incorporated into the array structure, so that when a disk failure is detected in a redundant array such as RAID 5, the damaged disk can be replaced by the spare immediately and the controller can start rebuilding the array right away. Depending on the controller, hot-spare drives can be global, i.e. used by all arrays created on it, or dedicated to a given array. It is important not to confuse a redundant RAID array with a backup, because in a fire, for example, all the disks in the array may be damaged. The only hope in that case is a backup kept separately, preferably in a safe place (e.g. on a remote server).

On the following pages we will discuss most of the available RAID levels and also mention JBOD, which strictly speaking is not a RAID level. We will then demonstrate how to create, edit and repair an array in three different implementations: software RAID in Windows 7 Pro, fake RAID on the AMD SB950 platform, and hardware RAID on a dedicated Adaptec 6405 controller. The article finishes with a description of the test platform and a large number of tests in several applications, with which we will try to answer questions such as whether it is worth buying several smaller SSDs paired in RAID rather than their bigger brother, or how an array of classic hard drives compares with a single SSD.

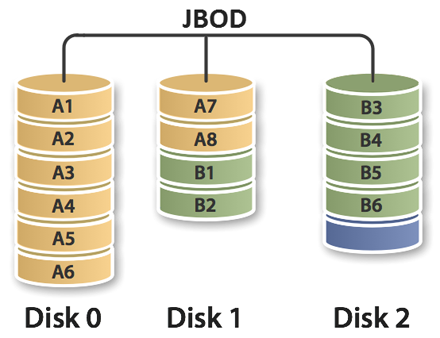

JBOD

JBOD is an acronym for "Just a Bunch Of Drives", i.e. simply a loose collection of disks. It is a non-RAID architecture that improves neither performance nor redundancy. JBOD comes down to two concepts: exposing individual physical disks (and any logical partitions on them) as separate logical volumes, and combining disks to create one large volume. The latter approach is the more common, especially in motherboard controllers. It is also known as SPAN, BIG or SLED (Single Large Expensive Drive). This second approach is sometimes called the reverse of partitioning: rather than dividing disks into smaller logical units, we combine them into one large unit. It is particularly useful if you have many small disks that are hard to use in any other way and that also differ in size, which makes them unsuitable for RAID 0 (we explain why in the description of that level). In SPAN/BIG JBOD configurations data is placed sequentially on the constituent drives: once the first is full, data is written to the next.
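The sequential placement used by a spanned volume can be sketched in a few lines of Python. This is only an illustration under simple assumptions, not how any particular controller works: the function name `span_locate` and the disk sizes are invented for the example.

```python
def span_locate(offset, disk_sizes):
    """Map a logical offset in a spanned volume to (disk index, offset on that disk)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    for index, size in enumerate(disk_sizes):
        if offset < size:
            return index, offset
        # The offset lies beyond this disk, so move on to the next one.
        offset -= size
    raise ValueError("offset is beyond the end of the spanned volume")


disks = [40, 100, 250]  # hypothetical disk sizes in GB, deliberately unequal
print("total capacity:", sum(disks))
print(span_locate(30, disks))  # still on the first disk
print(span_locate(45, disks))  # first disk is full, so this lands on the second
```

Note that the capacity of the span is simply the sum of the disk sizes, which is why unequal disks are not a problem here.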

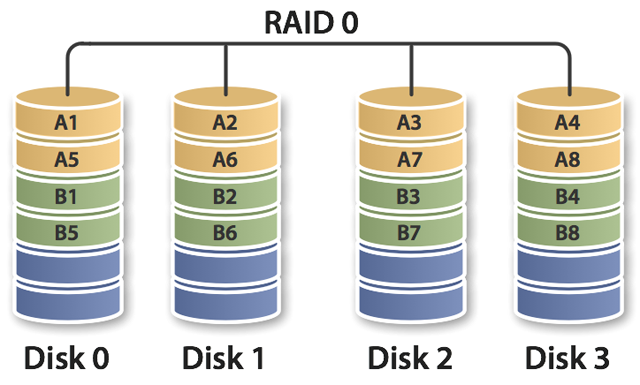

RAID 0

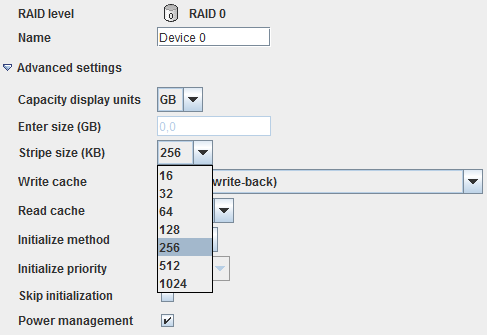

RAID level 0 is also called a striped volume. The minimum number of disks required to build this array is two. Data is divided into strips called "stripes" and written alternately to all disks in the array. Depending on the implementation, the stripe size may be one of the configuration options. Windows 7, for example, does not allow it to be changed, and fake RAID controllers on motherboards usually offer little room for adjustment (our test SB950 offered a choice of 64KB, 128KB and 256KB). Hardware solutions seem best in this respect (the tested Adaptec 6405 allows the stripe to be set from 16KB to 1024KB). The advantage of RAID 0 is improved read and write performance, which at best equals the performance of the slowest drive in the array multiplied by the number of disks. There are two drawbacks. The first, common to most RAID levels, is the requirement that the component disks be the same size. We can of course use different disks, but if, for example, we have three drives of 2.5GB, 10GB and 15GB, the total capacity of the RAID 0 array will be 7.5GB (the smallest drive times the number of disks). The second flaw is unique to RAID 0: it lacks any redundancy and, even worse, increases the risk of data loss, because a single disk failure results in the loss of virtually all data across the array.
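The capacity rule for unequal disks is easy to check with a short Python sketch. The function name `raid0_capacity` is an assumption made for this example; the disk sizes are the ones used in the text.

```python
def raid0_capacity(disk_sizes):
    """Usable capacity of a RAID 0 array: the smallest disk times the number of disks."""
    if len(disk_sizes) < 2:
        raise ValueError("RAID 0 needs at least two disks")
    return min(disk_sizes) * len(disk_sizes)


sizes_gb = [2.5, 10, 15]
print("usable:", raid0_capacity(sizes_gb), "GB")
print("wasted:", sum(sizes_gb) - raid0_capacity(sizes_gb), "GB")
```

The second line of output shows how much space is left unused when the disks differ this much in size.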

This serious flaw of level zero, which at the beginning was not even defined in the RAID documentation, is in fact the reason for the zero in its name. A general approximate formula for the failure probability of RAID 0 is $1 - (1 - r)^n$, where $r$ is the failure probability of a single disk (usually assumed to be identical for all component disks and independent between them) and $n$ is the number of disks in the array. The chance of failure of a two-disk array is therefore close to double that of a single disk. Add the fact that data on RAID 0 is stored in stripes, which makes it very difficult to salvage after a failure, and it can be concluded that RAID 0 is suitable only where performance matters and we either do not care about data safety or protect the data with backups.
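As a quick numerical check of the formula, the following Python sketch evaluates it for a few array sizes. The 5% single-disk failure probability is an assumed figure chosen only for illustration, and `raid0_failure` is a name invented for the example.

```python
def raid0_failure(r, n):
    """Probability that at least one of n independent disks fails: 1 - (1 - r)^n."""
    return 1 - (1 - r) ** n


r = 0.05  # assumed failure probability of a single disk
for n in (1, 2, 4):
    print(f"{n} disk(s): {raid0_failure(r, n):.4f}")
```

For two disks the result is just under twice the single-disk value, as the text states.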

In theory the stripe size can be as small as 1B, but in most solutions it is a multiple of the size of a single disk sector, which is 512B on a classic HDD; hence professional solutions allow the stripe to be set from 1KB upwards. Stripe size is one of the key factors in the performance of a RAID 0 array. Files smaller than the stripe size are stored in a single stripe on one drive in the array, while larger files are divided into stripe-sized pieces and stored alternately on all disks in the array. A stripe can hold only one file, which means a significant loss of space if we choose a stripe that is too large for our data. Access time in RAID 0 depends on how we access the data. When reading or writing large files (larger than a single stripe), all drives seek to the same place, so the access time is the same as for a single disk. For smaller files (e.g. a database), however, each drive can seek independently, which, assuming an even distribution of data, can cut the access time in half (for a two-disk array). Of course, these arguments rest on idealized assumptions.
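How striping spreads data over the disks can be shown with a small address calculation. This is a simplified sketch that assumes plain round-robin striping; the function name `raid0_locate` is invented here, and real controllers may add metadata or offsets.

```python
def raid0_locate(offset, stripe_size, num_disks):
    """Map a logical byte offset to (disk index, byte offset on that disk)."""
    stripe_index, within_stripe = divmod(offset, stripe_size)
    # Stripes are dealt out to the disks in turn; each full round forms one row.
    row, disk = divmod(stripe_index, num_disks)
    return disk, row * stripe_size + within_stripe


STRIPE = 64 * 1024  # 64KB stripe, one of the sizes mentioned in the text
for logical in (0, STRIPE, 2 * STRIPE, 2 * STRIPE + 100):
    print(logical, "->", raid0_locate(logical, STRIPE, num_disks=2))
```

Consecutive stripes alternate between the two disks, which is what lets a large sequential transfer keep both drives busy.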

Choosing the right stripe size for our application is one of the biggest problems when configuring a RAID 0 array. The generally recommended method is to analyze the sizes of the files on, say, our system partition, estimate the average size of most of them, and then choose a stripe such that a single file requires two (for a two-disk array) or more stripes, so that reads are faster. In practice the difference evens out, because when many small files are read at once they can be read from multiple disks. It is important, however, not to overdo it in either direction. Setting a 16KB stripe for an array that stores, for example, movies and photos will worsen rather than improve performance. Typical pictures are around 1-2MB in size, which means that to read or write them the controller must handle many stripes. For a film it will be thousands, which adds computational overhead that can be large enough to slow the controller down. The most commonly recommended values are 64KB or 128KB for a system array and the largest available size for an array that stores large files.
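To get a feel for these numbers, the Python sketch below counts how many stripes a file occupies for a given stripe size. It assumes the file starts on a stripe boundary, and the 700MB film size is a made-up example value.

```python
KB = 1024
MB = 1024 * KB


def stripes_needed(file_size, stripe_size):
    """Number of stripes a file occupies, assuming it starts on a stripe boundary."""
    return -(-file_size // stripe_size)  # integer ceiling division


for label, size in (("2MB photo", 2 * MB), ("700MB film", 700 * MB)):
    for stripe in (16 * KB, 128 * KB, 1024 * KB):
        count = stripes_needed(size, stripe)
        print(f"{label}, {stripe // KB}KB stripe: {count} stripes")
```

The smaller the stripe, the more pieces the controller has to handle for the same file.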

RAID 1

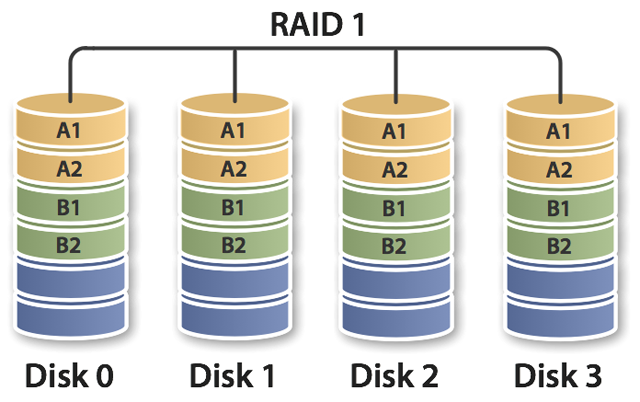

A mirrored array (mirrored volume), also known as level one, creates copies of data on two or more disks. Most solutions, however, limit the number to two. You can work around this limitation by creating a nested array (e.g. a RAID 1 of two RAID 1 arrays, or RAID 11), about which we say more in the description of RAID 10. In theory a level-one array offers read performance similar to RAID 0 by reading data from all component disks at once. In practice neither Windows 7 nor the RAID built into the SB950 allows this, and the Adaptec allows it only with read caching enabled. Write performance should be unchanged, i.e. equal to that of a single disk. The capacity of RAID 1 equals the size of the smallest component drive, which can be regarded as its greatest disadvantage. The advantages are an almost geometric increase in reliability with each disk in the array and a very simple implementation. Data is stored practically unchanged, so a single disk from such an array can work on its own.

This geometric increase in the reliability of RAID 1 can be expressed by the approximate formula $r^n$ for the probability of array failure, where $r$ is the failure probability of a single disk and $n$ is the number of disks in the array. It is easy to calculate that if, for example, our drives each have a 5% chance of failure, the chance of failure of a level-one array of two such disks would, under ideal conditions of statistical independence, be 0.25%. Reality is not so rosy, however. Most arrays use twin drives of the same model, bought, and probably produced, at the same time. In addition, the drives work in similar conditions, which means that the chance of both failing at a very similar time grows. Ultimately, the real-world reliability of RAID 1 comes down to the chance that the last healthy disk in the array fails during the time it takes us to detect the failure, replace the failed disk(s) and rebuild the array.
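The idealized figure can be reproduced with a one-line calculation. The sketch below assumes fully independent disk failures, which, as the text points out, real arrays do not quite satisfy; `raid1_failure` is a name invented for the example.

```python
def raid1_failure(r, n):
    """Probability that all n independent mirrored disks fail: r^n."""
    return r ** n


r = 0.05  # the 5% single-disk failure chance used in the text
for n in (1, 2, 3):
    print(f"{n} disk(s): {raid1_failure(r, n) * 100:.4f}%")
```

Each additional mirror multiplies the failure probability by another factor of r, hence the "geometric" improvement.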

We mentioned at the beginning that RAID should not be confused with backups. Redundant arrays, which means practically all known levels from one upwards, protect us from losing data when physical disks are damaged. They do not, however, protect against data corruption, e.g. by a virus, accidental overwriting, deletion or modification, or even simple write errors. RAID gives us continuous access to our data, because a disk failure will not block it, but for full confidence a backup is still necessary.

One of the advantages of RAID 1 already mentioned is ease of implementation. This makes it easy to mirror not only drives but also controllers. Nothing stands in the way of building a level-one array in Windows 7 from two disks connected to two independent controllers, such as the built-in chipset and an add-in card. This procedure is called splitting, or duplexing (for a two-disk array) or multiplexing (for multiple drives). This solution protects us against hardware write errors: if one controller writes data with errors, we still have the second drive attached to another, working controller. Unfortunately, viruses and user errors remain a problem. Ease of implementation also brings administrative advantages. Since in most cases a degraded RAID 1 array, i.e. one working on only one disk, retains the full capacity of one disk, nothing prevents us from removing a drive in order to make a backup, or simply putting the drive away and connecting a new one in its place. Of course, depending on how this is done, synchronization or a rebuild of the array may be needed.

We hinted in the first paragraph that, in theory, RAID 1 with the ability to read data independently from multiple disks should multiply read performance, much like RAID 0. In addition, access time on a two-drive array should fall by half and, in contrast to RAID 0, do so regardless of the access pattern, because a full copy of the data is present on every constituent disk. In practice, many implementations of RAID 1 can read data from only one disk at a time, and if they do read from both, the overhead of moving the head to the next sector while skipping those already read on the other disks can effectively offset any gains in sequential transfers. There is no improvement in writes, because all data must be saved to all disks at the same time, although no complex calculations slow writing down either. In the end, RAID 1 will perform similarly to a single drive and will give us only protection against drive failure, at the price of the capacity of one disk (in a two-disk array).

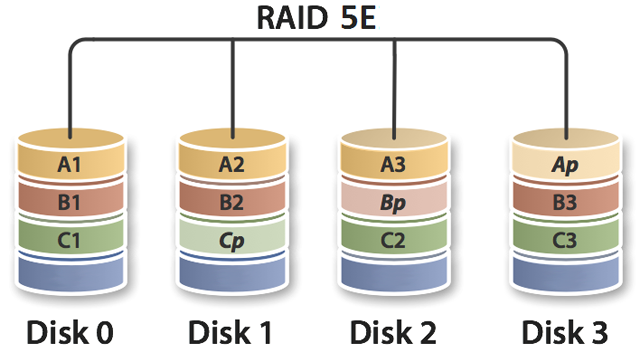

RAID 5

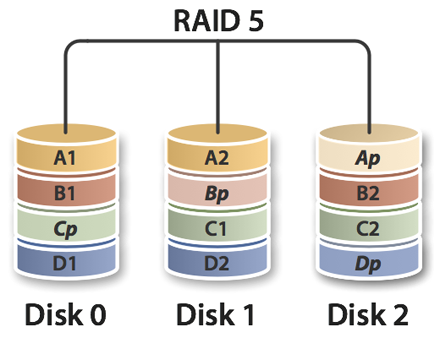

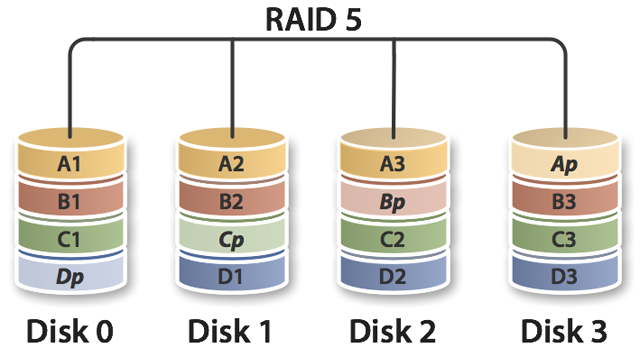

The first of the more complex RAID levels we describe is RAID 5. Like RAID 0, this level divides data into stripes, with the difference that parity data is additionally calculated. It has become popular because it has the lowest capacity overhead of all the redundant array levels. RAID 5 requires a minimum of three drives and loses the capacity of only one. For comparison, in four-disk configurations RAID 1 loses the capacity of as many as three drives, RAID 1E, 5EE, 6 and 10 lose two, and RAID 5 still loses only one. Of course, there is a trade-off: in that configuration RAID 1 withstands the failure of up to three disks, RAID 1E, 5EE, 6 and 10 up to two, and RAID 5, as is easy to guess, just one. On an array of n disks, data is stored as n-1 data stripes and one parity stripe per row. Unlike RAID 3 and RAID 4, which we do not describe and which RAID 5 has replaced, parity data is distributed across all disks in the array. Like RAID 1, a level-five array has asymmetric performance: reads are faster than on a single disk, while writes, depending on the implementation, may even be slower. In addition, the performance of a degraded array falls noticeably.
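The four-disk comparison can be written down as a small lookup, shown here as a Python sketch. The per-level rules are simplified assumptions that follow the figures quoted in the text (identical disks, RAID 1 as an n-way mirror), and the names `OVERHEAD` and `disks_lost` are invented for the example.

```python
# Capacity given up to redundancy, in whole disks, as a function of disk count n.
OVERHEAD = {
    "RAID 1": lambda n: n - 1,     # every disk but one holds a copy
    "RAID 1E": lambda n: n / 2,    # each stripe is stored twice
    "RAID 5": lambda n: 1,         # one disk's worth of parity
    "RAID 5EE": lambda n: 2,       # parity plus the built-in spare
    "RAID 6": lambda n: 2,         # two disks' worth of parity
    "RAID 10": lambda n: n / 2,    # mirrored pairs
}


def disks_lost(level, n):
    """Number of disks' worth of capacity not available to the user."""
    return OVERHEAD[level](n)


for level in OVERHEAD:
    lost = disks_lost(level, 4)
    print(f"{level}: loses {lost:g} of 4 disks, keeps {4 - lost:g}")
```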

For each "row" of stripes spread across all component disks, RAID 5 keeps one parity block that serves as the checksum of the others. If the system crashes during an active write to RAID 5, the parity block is likely to be inconsistent with the data blocks. If this goes undetected before a disk or block fails, it may result in incorrect data reconstruction. This vulnerability is sometimes referred to as the write hole. The most common protection against it is a battery-backed cache. In RAID 5, whenever one or more blocks are overwritten, the checksum must also be recalculated. This causes very large overhead on the controller and drives when working with large numbers of very small files. Each operation comes down to:

- Read the old data block

- Read the old parity block

- Comparing the old data block with the write request to determine the new parity

- Saving the new data block

- Saving the new parity block
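The steps above can be sketched with XOR parity in Python. This is a minimal model that keeps blocks in memory as `bytes`; the function names and sample data are invented for the example, and a real controller works on disk sectors instead.

```python
def xor_blocks(a, b):
    """Byte-wise XOR of two equally sized blocks."""
    if len(a) != len(b):
        raise ValueError("blocks must be the same size")
    return bytes(x ^ y for x, y in zip(a, b))


def small_write(old_data, old_parity, new_data):
    """Read-modify-write: derive the new parity from the old data, old parity and new data."""
    change = xor_blocks(old_data, new_data)      # which bits the write flips
    new_parity = xor_blocks(old_parity, change)  # flip the same bits in the parity
    return new_data, new_parity


# One row of a hypothetical four-disk array: three data blocks and their parity.
d0, d1, d2 = b"AAAA", b"BBBB", b"CCCC"
parity = xor_blocks(xor_blocks(d0, d1), d2)

d1, parity = small_write(d1, parity, b"bbbb")
# The updated parity matches a full recomputation over the whole row.
print(parity == xor_blocks(xor_blocks(d0, d1), d2))
```

The point of the shortcut is that only the changed data block and the parity block are touched; the other data blocks in the row do not have to be read.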

Write operations on RAID 5 are therefore quite demanding in terms of drive operations and bandwidth between the controller and the drives. Moreover, with firmware and software RAID all checksum calculations are performed on the CPU. Parity blocks are not read during a normal data read, since that would add a large overhead and cancel out any performance gains. They are read, however, when reading a data block fails on one of the component disks; the checksum is then used to rebuild the corrupted data. Similarly, when an entire disk fails, parity data is used to rebuild the missing data on the fly. This is sometimes called Interim Data Recovery Mode: the computer is informed of the array failure so that it can report it to the administrator, but all applications can still read and write data. Of course, this involves a degradation of performance.
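Rebuilding a missing block from parity can be illustrated with a short Python sketch. It assumes simple XOR parity and in-memory blocks; the function name `xor_all` and the sample data are made up for the example.

```python
from functools import reduce


def xor_all(*blocks):
    """Byte-wise XOR of any number of equally sized blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


# One row of a hypothetical four-disk array: three data blocks plus parity.
data = [b"RAID", b"five", b"demo"]
parity = xor_all(*data)

# Suppose the disk holding data[1] fails: XOR the survivors with the parity.
rebuilt = xor_all(data[0], data[2], parity)
print(rebuilt)
```

Every read of a lost block requires reading all the other blocks in the row, which is where the performance degradation in this mode comes from.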

The read-modify-write procedure described above takes a heavy toll on RAID 5 write performance when operations are performed on files smaller than the stripe size. This is because with each such write the parity block must be overwritten with a new checksum. With a large number of disks this means that the same parity block is overwritten repeatedly when data is saved to all the data blocks in a "row". For this reason RAID 5 performs miserably for random writes, e.g. in databases. More advanced implementations of RAID 5 can use a non-volatile cache to "hold" all the small write requests and then write a complete "row", storing all the data blocks and the checksum block calculated for them in one go. Read performance remains at a level similar to RAID 0 with the same number of disks. Apart from the parity blocks, the on-disk layout of the stripes is virtually the same as in RAID 0, and the difference in performance comes from the overhead of skipping the parity blocks.

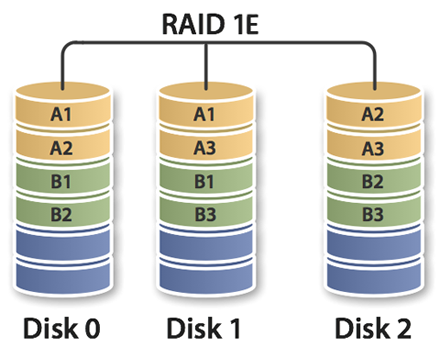

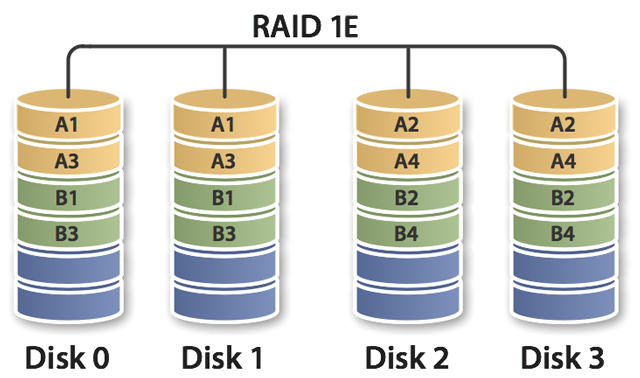

RAID 1E

RAID 1E, where the letter E stands for Enhanced, is an extended version of the level-one array and belongs to the so-called non-standard RAID levels. This mode is also called striped mirroring, enhanced mirroring or hybrid mirroring. The array is a combination of RAID 1 and RAID 0. Data is divided into stripes as in RAID 0 and spread across all disks in the array, but, as in RAID 1, each set of stripes has a copy, which is written with a shift of one block/disk. RAID 1E requires a minimum of three disks, and with an even number of disks its distribution of stripes is identical to RAID 10. The advantage of a level 1E array is better performance than RAID 1, though this is mainly thanks to the larger number of disks. The advantage grows, however, if the controller does not support simultaneous reads from several drives in RAID 1, or does so poorly. The drawbacks are the higher minimum number of disks needed to build the array, the 50% reduction in space available to the user, and the fact that RAID 1 with a comparable number of disks "survives" more disk failures (with three disks, RAID 1E survives one failure and RAID 1 two). The enhanced mirrored array is also implemented, in practice, only in newer models of hardware controllers.

In theory, the fault tolerance of level 1E with an even number of drives is similar to RAID 10, meaning that half of the disks may fail. Our tests show this is not entirely true: on the Adaptec 6405, simulating the failure of two of the four drives in a 1E array put it into a state of total failure, regardless of which drives were removed. In configurations with an odd number of disks, at most (n-1)/2 drives may fail, where n is the number of drives in the array, which gives one disk for a three-disk array and two for a five-disk array. The failed disks must not be adjacent to each other, which reduces the number of multi-disk failure combinations that the array can survive.
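The adjacency rule can be expressed as a short Python check. The sketch assumes a simplified theoretical layout in which the copy of each stripe sits on the next disk, wrapping around at the end; the function names are invented for the example, and, as the test with the Adaptec 6405 shows, real controllers may behave differently.

```python
def raid1e_survives(failed, num_disks):
    """True if no two neighbouring disks (with wrap-around) have both failed."""
    failed = set(failed)
    return not any(
        disk in failed and (disk + 1) % num_disks in failed
        for disk in range(num_disks)
    )


def max_tolerated(num_disks):
    """Largest number of non-adjacent failures: n/2 for even n, (n-1)/2 for odd n."""
    return num_disks // 2


print(raid1e_survives({0, 2}, 5))  # disks 0 and 2 are not neighbours
print(raid1e_survives({0, 1}, 5))  # neighbours: both copies of some stripes are gone
print(raid1e_survives({0, 4}, 5))  # the last disk neighbours the first
print(max_tolerated(3), max_tolerated(5))
```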

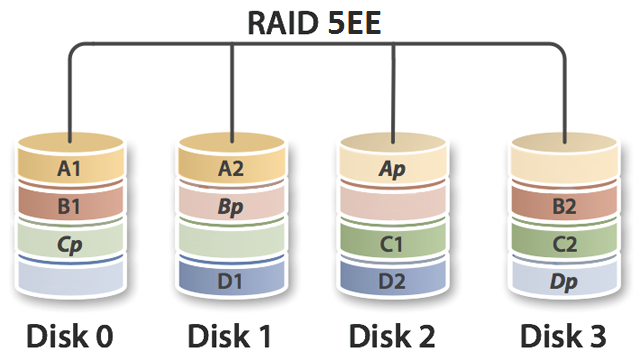

RAID 5EE (RAID 5E)

RAID 5EE is an enhanced form of RAID 5E, although the two are often treated as equivalent. Both extend a level-five array by building a hot-spare drive into its structure, and both belong to the list of non-standard RAID levels. RAID 5E and RAID 5EE therefore require a minimum of four drives. In theory they withstand the failure of up to two drives. In practice, however, there is a fairly sizable catch: the drives may fail only one at a time, separated by the interval needed for so-called array compression. The point is that after a disk failure in a 5E or 5EE array, the built-in spare space is used to compress the array into an ordinary RAID 5 array. Only then can a second drive fail, a failure that a level-five array can survive. Depending on the implementation, after compression (i.e. after the failure of one disk) a RAID 5E/5EE array either remains RAID 5 permanently or, once the failed drive is replaced, is expanded from RAID 5 back to 5E/5EE. The latter approach is used in the Adaptec controller we tested. The main advantage of these arrays over RAID 5 is, of course, the built-in "hot" spare. The second advantage is higher performance than RAID 5, but it comes at the cost of a minimum of four disks and a smaller total available capacity (compared with RAID 5 on the same number of disks).

Both levels, as already mentioned, embed the spare disk in their structure, but they differ in how. RAID 5E places its stripes at the beginning of all component disks (like RAID 5 on four disks), leaving empty space at the end of each that adds up to the size of one component disk. This is the built-in hot spare. Because stripes are spread over four disks rather than three, as they would be with RAID 5 plus a regular (dedicated or global) hot spare, the performance of such an array is simply higher, just as RAID 0 on four disks is faster than on three. The fact that all the "working" areas lie at the start of the disks may also have a marginal but measurable effect on the speed of the array, because the slowest areas of a regular HDD, at the end of the platters, are the ones reserved as spare. The main decision when choosing between RAID 5E/5EE and RAID 5 on three drives with a dedicated hot spare is whether performance matters more to us, or the fact that a dedicated hot-spare drive is idle and may even be completely powered down by the controller, and thus does not wear.

RAID 5EE differs from RAID 5E in that the reserve area, instead of sitting at the end of the component drives, is distributed as stripes alongside the parity stripes (as in RAID 6, but with stripes of empty space instead of a second set of parity stripes). This has two implications: compression of such an array is faster than with RAID 5E, but the speed benefit mentioned in the previous paragraph, which comes from keeping data off the slowest areas of the disks, is absent. Depending on the implementation, both types of enhanced level-five array allow a maximum of eight to sixteen drives, although the diminishing speed gain and the I/O effort of compressing and expanding the array reduce the maximum useful number to the lower value. In addition, both arrays allow only one logical drive to be created on them. The last disadvantage of these arrays is that, like RAID 1E, they are rarely available.

RAID 6

The level-six array is the highest on the list of standard RAID levels that we describe. Like RAID 5E/5EE, RAID 6 is an extended form of RAID 5. The difference is that instead of a built-in spare disk, a level-six array has a second set of parity. Each "row" contains n-2 data blocks, where n is the number of disks in the array, and two parity blocks. One is an ordinary simple checksum (as in RAID 5), and the other is much more complicated. RAID 6 requires a minimum of four drives, with the capacity of two devoted to parity. The biggest advantage of RAID 6 is resistance to the simultaneous failure of up to two disks. In addition, the array withstands a second disk failure while it is being rebuilt after the failure of the first, which makes it much safer than RAID 5 and 5E/5EE. Both sets of parity stripes are distributed across all disks, as in all levels with the number five in their name.

RAID 6 has several different implementations, and the general definition of this level comes down to: "a form of RAID that can continue to execute read and write requests to all of the array's logical drives despite the failure of any two component disks at the same time." The Adaptec controller we were able to test implements RAID 6 using block parity (XOR) together with Reed-Solomon coding. An approximate formula for the probability of failure of a level-six array is $n(n-1)(n-2)r^3$, where $n$ is the number of disks and $r$ is the probability of failure of a single component disk. Like RAID 5, a level-six array suffers no loss of read performance but does slow down on writes because of the parity calculations. A well-implemented RAID 6 on a dedicated controller should perform similarly to RAID 5 built from one disk fewer. Firmware and software solutions are rare, because computing the parity places too great a load on the CPU, and fitting specialized ASICs (Application-Specific Integrated Circuits, i.e. integrated circuits designed for predefined tasks) is expensive.
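The approximate formula quoted above is easy to evaluate numerically. The Python sketch below does so for a few array sizes; the 5% single-disk failure probability is an assumed value for illustration, and the function name `raid6_failure_approx` is invented here.

```python
def raid6_failure_approx(r, n):
    """The article's approximation for RAID 6 array failure: n(n-1)(n-2) * r^3."""
    if n < 4:
        raise ValueError("RAID 6 needs at least four disks")
    return n * (n - 1) * (n - 2) * r ** 3


r = 0.05  # assumed failure probability of a single disk
for n in (4, 6, 8):
    print(f"{n} disks: {raid6_failure_approx(r, n):.4f}")
```

The cubic term reflects the fact that three disks must fail before data is lost, while the factor in front grows with the number of disks in the array.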

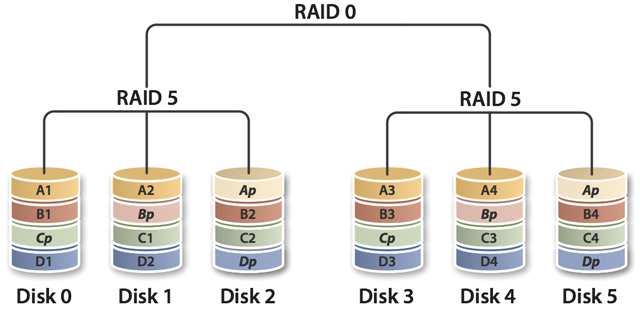

RAID 10 - nested / hybrid RAID

Basic and non-standard RAID levels are not the end of the variety of arrays. The next step is the so-called nested, or hybrid, levels. What are they? Put simply, they combine several arrays of one RAID type into a single array of another type. Usually it is RAID 0, used for speed, that combines several redundant arrays such as RAID 1. This arrangement minimizes the number of disks to rebuild when one drive in the array fails, which is why RAID 10 is preferred over RAID 01. The failure of one disk in RAID 10 involves replacing and rebuilding only that disk, which is part of one of the RAID 1 arrays. In RAID 01 the same situation involves replacing one disk but rebuilding an entire RAID 0 array. Nested arrays usually require double the minimum number of disks (RAID 10 needs four, RAID 1 two) and are easy to create yourself. For example, if we want RAID 10 and our motherboard or dedicated controller does not support this level, it is enough to create two RAID 1 arrays and then combine them into a RAID 0 in software such as Windows, or, if the motherboard or hardware controller allows arrays to be built from other arrays, to do it there. Depending on the approach chosen, however, such an array may not be bootable.

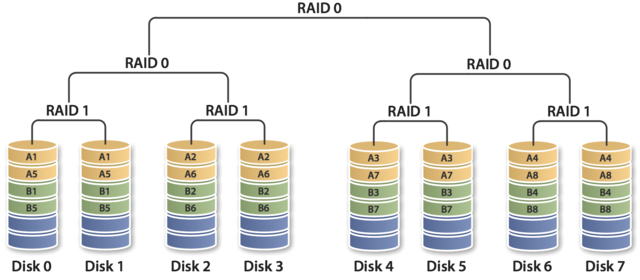

The general idea behind naming hybrid arrays is that the lowest level in the RAID hierarchy is the first digit, and each higher level is the next digit. The names come in roughly two forms: two- or multi-digit numbers, or digits joined with + or &. Thus two RAID 1 arrays linked via RAID 0 are written as 1+0, 1&0 or, most often, 10. Most nested arrays are limited to two levels combined together (e.g. 01, 10, 50, 60). The exception is RAID 100 (1+0+0), also known as 10+0 and usually implemented as a software RAID 0 of two hardware RAID 10 arrays. It is similar to a RAID 10 made up of four RAID 1 arrays, but by spreading the work over a greater number of controllers it offers better random read performance and better resistance to "hot spots".

Multi-level nesting makes it possible, in practice, to build giant, virtually unlimited arrays presented as one huge logical drive. According to independent tests, hybrid arrays also offer better performance than most single-level arrays; in performance RAID 10, for example, loses only to RAID 0 (check this in our tests :-)). RAID 10 is indeed best suited to I/O-intensive applications such as databases, web servers or e-mail servers. In terms of capacity and reliability, however, there is no such advantage. Because identical disks are most often used and RAID 1 is almost always limited to two drives, the capacity of RAID 10 available to the user can be calculated from the formula $nc/2$, where $n$ is the number of disks in the RAID 10 and $c$ is the capacity of a single drive. RAID 10 withstands the failure of all but one drive in each component RAID 1 array, which in a typical four-disk array means one disk in each of the two RAID 1 arrays may fail. After such a failure, the chance of losing the entire RAID 10 array is the same as for a single disk.
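Both the capacity formula and the failure rule can be checked with a short Python sketch. It assumes identical disks grouped into two-disk mirrors in order (disks 0 and 1, disks 2 and 3, and so on); the function names and the 2TB disk size are invented for the example.

```python
def raid10_capacity(num_disks, disk_capacity):
    """Usable capacity of RAID 10 built from two-disk mirrors: n * c / 2."""
    if num_disks < 4 or num_disks % 2:
        raise ValueError("this sketch expects an even number of disks, at least four")
    return num_disks * disk_capacity / 2


def raid10_survives(failed, num_disks):
    """True if every mirrored pair still has at least one working disk."""
    failed = set(failed)
    return all(
        not (first in failed and first + 1 in failed)
        for first in range(0, num_disks, 2)
    )


print(raid10_capacity(4, 2), "TB usable from four hypothetical 2TB disks")
print(raid10_survives({0, 2}, 4))  # one disk lost from each mirror
print(raid10_survives({0, 1}, 4))  # both disks of the same mirror lost
```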