Sounds good! But it works only as long as the files are absolutely identical. If even a single byte of one of the identical files is modified, a new, modified copy is created and the efficiency of deduplication drops.

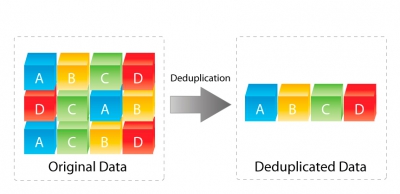

Block-level deduplication works at the level of the data blocks written to disk, and hash functions are used to judge whether blocks are identical or unique. The deduplication system keeps a hash table for all the data blocks it stores. As soon as it finds matching hashes for different blocks, it saves those blocks as a single copy plus a number of links to it. It is also possible to compare data blocks from different computers (global deduplication), which increases efficiency even further, because the disks of different computers running the same operating system may hold a lot of repeated data. Note that the greatest efficiency is achieved by reducing the block size and maximizing the block repetition factor. Accordingly, there are two methods of block-level deduplication: with fixed (set in advance) and variable (chosen dynamically for specific data) block length.
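To make the idea concrete, here is a minimal sketch of fixed-length block deduplication. It is an illustration only, not how any particular product works: the `BlockStore` class, the 4 KiB block size and the choice of SHA-256 are all assumptions made for the example.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed fixed block length, in bytes


class BlockStore:
    """Toy in-memory store: one copy per unique block plus per-file links."""

    def __init__(self, block_size=BLOCK_SIZE):
        self.block_size = block_size
        self.blocks = {}  # hash -> block bytes (the hash table)
        self.files = {}   # file name -> ordered list of block hashes (the links)

    def put(self, name, data):
        links = []
        for offset in range(0, len(data), self.block_size):
            block = data[offset:offset + self.block_size]
            digest = hashlib.sha256(block).hexdigest()
            # Keep the block only if its hash has not been seen before.
            self.blocks.setdefault(digest, block)
            links.append(digest)
        self.files[name] = links

    def get(self, name):
        # Rebuild the file by following its links.
        return b"".join(self.blocks[d] for d in self.files[name])

    def stored_bytes(self):
        return sum(len(block) for block in self.blocks.values())


# Four distinct 4 KiB blocks, then a copy that differs in a single byte.
original = b"".join(bytes([i]) * BLOCK_SIZE for i in range(4))
modified = bytearray(original)
modified[5000] ^= 0xFF  # flip one byte inside the second block
modified = bytes(modified)

store = BlockStore()
store.put("original.bin", original)
store.put("modified.bin", modified)

print(len(original) + len(modified))  # logical size of both files
print(store.stored_bytes())           # bytes actually kept after deduplication
```

In this sketch the two files add up to 32 KiB, but only five unique blocks (20 KiB) are kept: the one-byte change costs one extra block rather than a whole new copy of the file, which is exactly where file-level deduplication falls short.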

Deduplication application areas

Many vendors of products that support deduplication focus on the backup market. Over time, backups may occupy two to three times more space than the original data. That is why backup products use file-level deduplication, which, however, may not be enough in some circumstances. Adding block-level deduplication may substantially increase the efficiency of storage use and make it easier to meet fault-tolerance requirements.

Another way to use deduplication is on production servers. It can be done by the OS itself, by additional software, or by the storage system hardware. Caution is needed here: for example, Windows 2008, an OS that is said to be able to deduplicate data, performs only SIS. At the same time, a storage system may perform block-level deduplication while presenting the file system to the user in its expanded (original) form, hiding all the details of deduplication. Let's assume that 4 GB of data is stored on a storage system and has been deduplicated to 2 GB. If a user accesses such storage, they see 4 GB of data, and exactly this volume ends up in backup copies.

Reduced percentage and high hopes

The percentage of disk space saved is the figure most often manipulated, as in claims of a “95% reduction in the size of backup files”. However, the algorithm used to calculate this ratio may not be relevant to your specific situation. The first variable to take into account is the type of files. Formats such as ZIP, CAB, JPG, MP3 and AVI hold already compressed data, so they yield a lower deduplication ratio. No less important are how often the data changes and how much archived data is kept. If you are using a product that deduplicates existing data on a file server, you need not worry. But if deduplication is used as part of a backup system, you need to answer the following questions:

- How often does data change?

- Are the changes substantial, or do only a few blocks in a file change?

- How often do you back up data and how many files are stored?

It is easy to estimate deduplication online with the help of special calculators, but that does not tell you how well it will work in your specific situation. As you may have noticed, the percentage depends on a number of factors: in theory it reaches 95%, while in practice it may be only a few percent.
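The dependence on change rate and on the number of stored backups can be illustrated with a deliberately simplistic model. It assumes that every backup is a full copy of the same size, that a fixed fraction of blocks changes between consecutive backups, and that there are no duplicates inside a single backup. The function name and parameters are hypothetical, chosen only for this sketch.

```python
def estimated_savings(full_size_gb, backups, change_rate):
    """Fraction of space saved by block-level deduplication across full backups.

    full_size_gb -- size of one full backup
    backups      -- number of full backups kept
    change_rate  -- fraction of blocks that change between two backups (0..1)
    """
    logical = full_size_gb * backups
    # The first backup is stored whole; each later one adds only changed blocks.
    physical = full_size_gb * (1 + (backups - 1) * change_rate)
    return 1 - physical / logical


# Thirty backups with 2% of blocks changing between them.
print(f"{estimated_savings(100, 30, 0.02):.1%}")
# Two backups with 90% of blocks changing between them.
print(f"{estimated_savings(100, 2, 0.9):.1%}")
```

Under these assumptions the first scenario saves roughly 95% and the second about 5%, which matches the gap between the advertised figure and what a fast-changing data set with short retention may actually achieve.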

It is all about time

When talking about deduplication in backup systems, we need to know how fast it is performed. There are three main types of deduplication:

- source (on the side of data source);

- target (or “post-processing deduplication”);

- inline (or “transit deduplication”).

First type: Deduplication on the side of data source

It is performed on the device where the original data is stored. Any data marked for backup is divided into blocks, and a hash is calculated for each block. Some potential issues stand out here.

- The first issue is that deduplication uses the resources of the source machine, so you have to make sure it has enough CPU and RAM to spare. It makes no sense to run deduplication on an already loaded mail server. Of course, some vendors say that their solutions are lightweight, but that does not change the fact that the performance of the primary environment will be affected, which may be unacceptable.

- The second issue is where to store the hash tables. You can keep them on the source server itself or on a centralized network server (required if global deduplication is applied); the latter, however, puts additional load on the network.

- Despite its drawbacks, source deduplication has its place, for example in companies with a small IT infrastructure of only a few servers, where deploying a dedicated deduplication system would be unjustified.
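The trade-offs listed above can be seen in a small sketch of a source-side backup: the source machine does the hashing, asks a central repository which hashes it lacks, and sends only those blocks. The `Repository` class, its `missing` and `upload` methods and the `backup_from_source` function are hypothetical names invented for this illustration; a real product would talk to the repository over the network.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed fixed block length, in bytes


class Repository:
    """Stand-in for a central backup server holding the global hash table."""

    def __init__(self):
        self.blocks = {}  # hash -> block bytes

    def missing(self, digests):
        # In a real setup this lookup is a network round trip.
        return {d for d in digests if d not in self.blocks}

    def upload(self, new_blocks):
        self.blocks.update(new_blocks)


def backup_from_source(data, repo, block_size=BLOCK_SIZE):
    """Hash blocks on the source, then send only blocks the repository lacks."""
    local = {}
    recipe = []  # ordered hashes needed to restore the data
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()  # CPU cost paid by the source
        local[digest] = block
        recipe.append(digest)
    payload = {d: local[d] for d in repo.missing(set(recipe))}
    repo.upload(payload)
    bytes_sent = sum(len(block) for block in payload.values())
    return recipe, bytes_sent


repo = Repository()
monday = b"".join(bytes([i]) * BLOCK_SIZE for i in range(4))
tuesday = monday[:5000] + b"\xff" + monday[5001:]  # one byte changed

_, sent_monday = backup_from_source(monday, repo)
_, sent_tuesday = backup_from_source(tuesday, repo)
print(sent_monday, sent_tuesday)
```

The second run transfers a single block instead of the whole data set, but both the hashing and the hash lookups are paid for by the source machine and the network, as noted in the list.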

Target (or post-processing) deduplication

Assume that data from all computers is sent to one backup repository. As soon as the data is received, the repository can build a hash table for its blocks. The primary advantage of this approach is the large data volume: the larger the data pool, the larger the hash table and, consequently, the greater the chance of finding identical blocks. Another advantage is that the whole process takes place outside the production network.

However, this option does not solve every problem. There are some points that should be taken into account.

- First, there is the dependence on free space. If you have a large infrastructure, the required space may be huge.

- Another disadvantage of target deduplication is its high demands on the repository’s disk subsystem. Usually the data has to be written to the repository’s disk before it is divided into blocks, and only then do hashing and deduplication start. This makes the disk subsystem the bottleneck of the architecture.

- The third disadvantage is that every hash function has some probability of a hash collision, i.e. a situation where the same hash is computed for two different blocks of data. If a collision goes undetected, one block is replaced by a link to the other and the original data is corrupted. To avoid this, one should choose a hash algorithm with a minimal probability of collision, which in turn requires more computing power. Usually this is not a problem, since target deduplication runs on hardware that is able to handle the load. It should be noted that the probability of collisions for modern hash functions is very small.

- The fourth potential disadvantage is that the full data volume from production has to be transferred over the network without putting substantial load on the network or on the production system itself. This can be addressed by running backups at night or during other less busy hours, or by moving backup traffic to a separate network (a common practice in medium and large companies).
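How small the collision risk mentioned in the list above really is can be estimated with the standard birthday approximation. The sketch below assumes that hash values are uniformly distributed and uses a hypothetical pool of 1 PiB of unique data in 4 KiB blocks; the function name is made up for the example.

```python
import math


def collision_probability(num_blocks, hash_bits):
    """Approximate chance that at least two distinct blocks share a hash.

    Birthday approximation: p ~= 1 - exp(-n * (n - 1) / 2 ** (bits + 1)),
    assuming uniformly distributed hash values.
    """
    exponent = -num_blocks * (num_blocks - 1) / 2 ** (hash_bits + 1)
    return -math.expm1(exponent)  # 1 - exp(exponent), accurate for tiny values


# Assumed workload: 1 PiB of unique data in 4 KiB blocks = 2**38 blocks.
blocks_in_pool = 2 ** 50 // 4096

for bits in (32, 64, 160, 256):
    print(bits, collision_probability(blocks_in_pool, bits))
```

Under these assumptions a 32-bit or 64-bit hash is practically guaranteed to collide somewhere in the pool, while 160-bit and 256-bit digests give vanishingly small probabilities. Longer digests cost more CPU time per block, which is the trade-off described above.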

Transit deduplication

Transit deduplication takes place while data is being transferred from source to target. The data is deduplicated in the RAM of the target device before it is written to disk. Transit deduplication may be viewed as the best form of target deduplication: it has all the advantages of a global view of the data and offloads hashing from the source.

However, it still involves heavy network traffic and potential hash collisions. This method requires the most computing resources (CPU and memory) of the three.
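A minimal sketch of the idea, assuming fixed 4 KiB blocks and SHA-256: each incoming block is hashed in memory, and only blocks not seen before are written to disk. Here `io.BytesIO` objects stand in for the network stream and the disk, and the `inline_dedup` function is a hypothetical name used only for this illustration.

```python
import hashlib
import io

BLOCK_SIZE = 4096  # assumed fixed block length, in bytes


def inline_dedup(stream, index, disk, block_size=BLOCK_SIZE):
    """Deduplicate blocks as they arrive, before anything is written to disk.

    stream -- incoming data (file-like object)
    index  -- dict mapping hash -> offset of the stored block on disk
    disk   -- append-only file-like object standing in for the repository disk
    """
    recipe = []  # ordered hashes needed to restore the stream
    while True:
        block = stream.read(block_size)
        if not block:
            break
        digest = hashlib.sha256(block).hexdigest()  # hashing happens in RAM
        if digest not in index:
            index[digest] = disk.tell()  # remember where the block is stored
            disk.write(block)            # only unseen blocks reach the disk
        recipe.append(digest)
    return recipe


block_a = b"A" * BLOCK_SIZE
block_b = b"B" * BLOCK_SIZE
incoming = io.BytesIO(block_a + block_b + block_a)  # third block repeats the first

index, disk = {}, io.BytesIO()
recipe = inline_dedup(incoming, index, disk)
print(len(recipe), len(index), len(disk.getvalue()))
```

Three blocks arrive, but only two are written, so the disk is no longer the bottleneck it is in post-processing. The price is that all hashing and index lookups must keep up with the incoming stream, which is why this method is the most demanding in CPU and memory.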